Doing CHI together: The benefits of a writing retreat for early-career HCI researchers

Authors:

Ava Elizabeth Scott,

Leon Reicherts,

Evropi Stefanidi

Posted: Tue, April 16, 2024 - 10:30:00

The deadline for the CHI conference is an important event on an HCI researcher’s calendar. For CHI 2023, more than 3,000 papers were submitted and only 28 percent were accepted. With so many submissions and such a low acceptance rate, researchers face pressure to make the best submission possible. So how can we best introduce early-career researchers to the charms and quirks of writing a CHI paper while helping them submit the strongest paper they can?

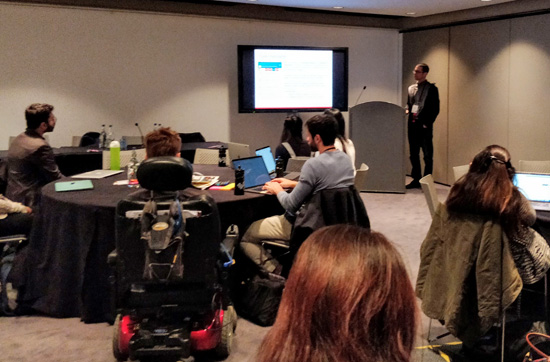

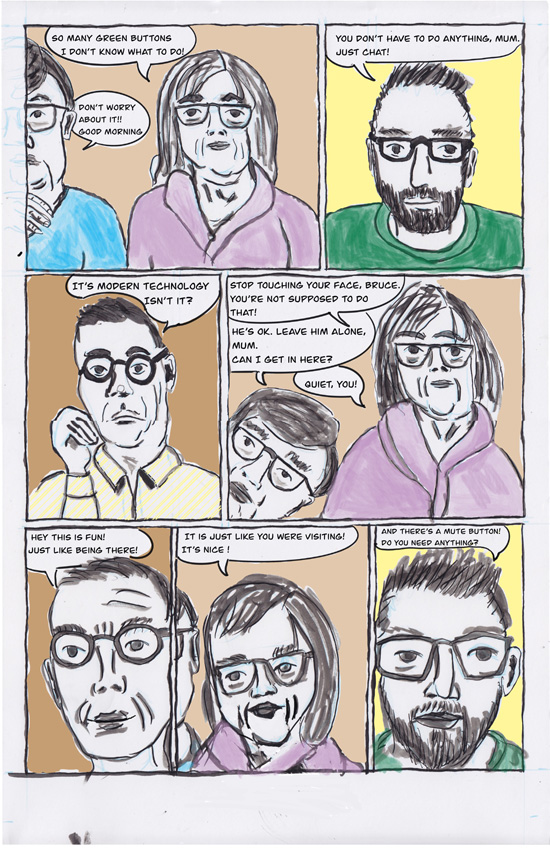

For the past two years, the University of St. Gallen in Switzerland has hosted a writing retreat called CHI Together, which brings researchers together for two and a half weeks directly before the CHI deadline. While many of these researchers are experienced CHI authors, some are going through this process for the first time. At the inaugural retreat in 2022, attendees submitted a dozen papers in total. The idea behind the retreat was to help participants collaborate efficiently and manage the stressful phase before the deadline in the company of like-minded people, and then to celebrate their submissions. Hoping to repeat and build on this success, in 2023 the computer science department at St. Gallen invited 21 researchers to come together, physically and collaboratively, in the run-up to the deadline for CHI 2024.

This endeavor was made possible by funding from the SIGCHI Development Fund and from the University of St. Gallen. We are hugely grateful for this support, which paid for the many healthy social activities and group meals, as well as accommodation and travel expenses. Here we describe these activities, from our first day in St. Gallen to the final, delirious day of submissions and the celebration that followed. We then reflect on the intensity of the CHI deadline and recommend that early-career researchers be supported with social and recreational resources, as well as pragmatic and technical measures.

Arriving by plane and train on a warm day in late August, we were immediately struck by the fact that both had run on time. As students from the U.K. and Germany, we always find Swiss timeliness a nice surprise. We reunited with the other visiting researchers, new and familiar faces alike. Our apartments were a 10-minute walk from the computer science building in the center of St. Gallen, halfway up a steep hill. While it was an easy jaunt down to the office, there was a significant disincentive to leave it: the hike between you and your bed. Though this felt like a slog at the time, the daily exercise surely benefited us. These apartments were our home for the next two and a half weeks, but we spent much more time at the office.

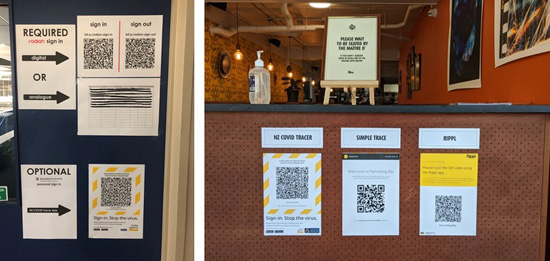

Sharing an open office environment catalyzed natural collaboration, as well as companionship and social breaks during the workday.

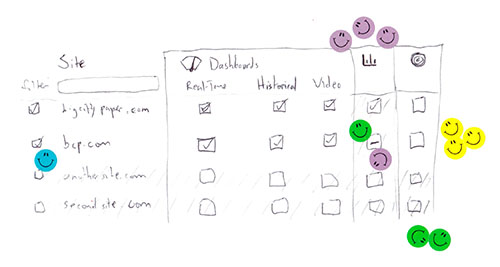

In the computer science building, we each had desk space with a display monitor and a comfortable chair. In anticipation of the numerous hours we would spend writing, an ergonomic setup was a priority. In this open office setting, students collected data, analyzed it, and scratched their heads over the structure and presentation of their results.

A selection of irreverent and humorous Ph.D. memes was spread over the desks.

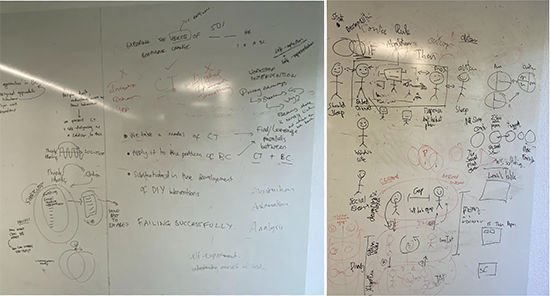

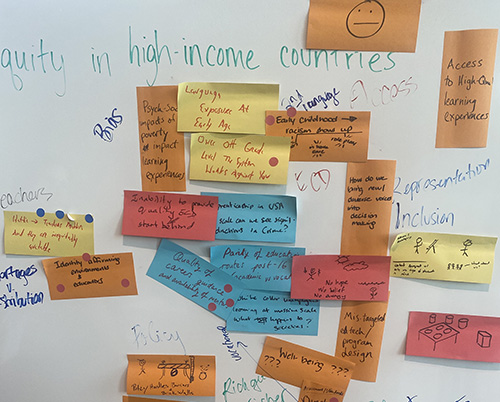

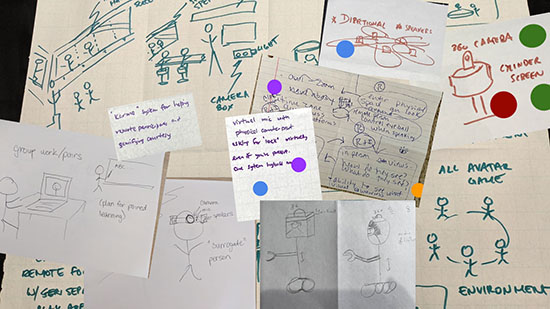

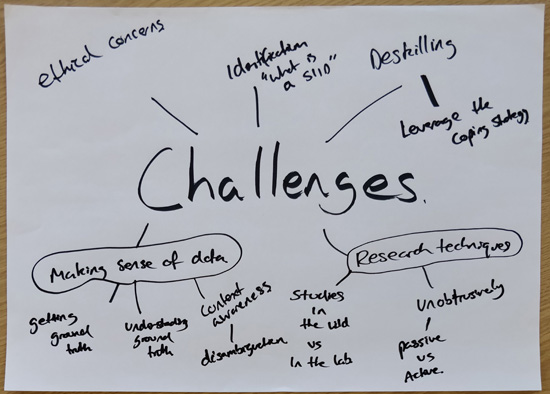

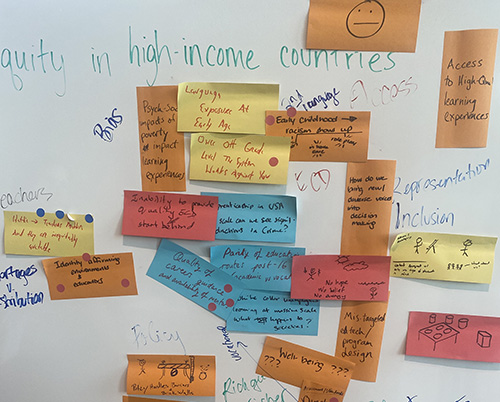

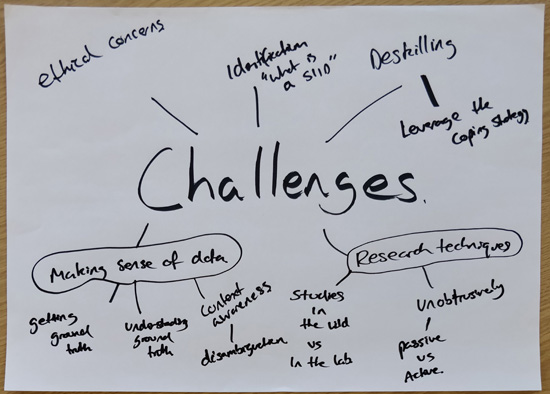

Knowledge exchange sessions were organized to support these processes, including sessions on qualitative analysis and how to write a CHI paper. We swapped abstracts and drafts with one another to get feedback on our efforts. In addition to receiving guidance on analysis and writing, we also created and shared analytical tools, such as a transcription and speaker-segmentation pipeline, which we used to transcribe more than 100 interviews across different research projects. Using the wall-size whiteboards around the office, we collectively sketched and resketched papers’ structure, argumentation, models, and figures. By distributing our thinking across multiple brains and the physical space, we could work through hard problems with greater speed and clarity.

This intensive brain activity required fuel. Most days, we took turns making group lunches in the lab kitchen—usually an elaborate salad with fresh bread and cheese. By sharing lunch duties, we reduced the overall time spent on meal preparation, while also eating well. As the deadline got closer and the days became longer, we frequently honored the tradition of grabbing doner kebab or Thai takeaway for dinner.

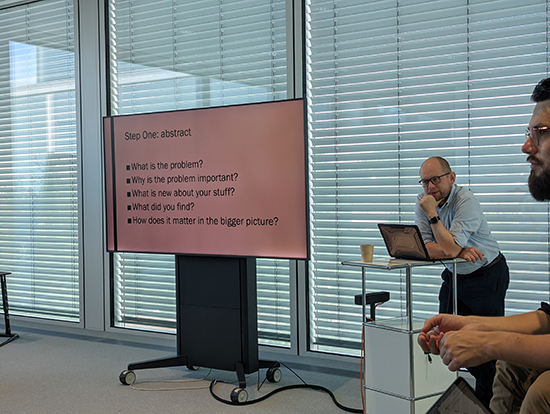

Paweł W. Woźniak presented the key elements of an abstract.

There were also several optional activities that provided a much-needed break. We hiked the nearby mountains, took a dip in the local mountain lakes, indulged in manic dance and workout breaks, went to the cinema, and shared pizza and burgers in the evenings. Furthermore, a contingent of researchers attended the Mensch und Computer conference in nearby Rapperswil, enabling further exchange with the D-A-CH (Germany, Austria, and Switzerland) HCI community. Postdocs and those in their final year of Ph.D. research also attended an information session, organized by the research office at St. Gallen, about research funding opportunities across Switzerland and the rest of Europe. This mix of intellectual, recreational, and forward-looking activities helped offset the otherwise intensive writing schedule.

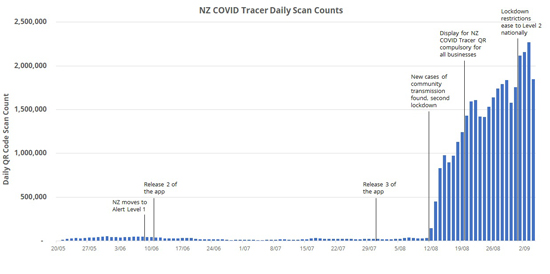

Immersed in this culture of dedicated focus, we collectively submitted more than a dozen papers for CHI 2024. One supervisor said that their students’ writing during this period was the clearest and most effective of their studies so far. While some of us were clicking the submit button at the very last moment (2 p.m. on September 15 in our time zone), we all got our papers in on time and celebrated with drinks and snacks in the lab. Despite many of us having pulled all-nighters, the drinks continued until 2 a.m. We said heartfelt goodbyes, and left for our respective destinations the following morning, looking forward (with slight trepidation!) to receiving our reviews in a few weeks.

The collective whiteboards were the site of active collaborative thinking, sketching iterations of models, figures, and argumentation.

Large, top-tier conferences such as CHI are critical venues for presenting work, receiving feedback, and networking with peers and future collaborators. However, due to the appropriately high standards set for paper acceptance and the cost of in-person attendance, such conferences are not always accessible to early-career scholars. The CHI Together model of collaborative writing before the deadline provides support in multiple ways, offering an encouraging, knowledgeable community to help steer research work and frame completed work following best practices for different submission types. CHI Together also supports recreational activities that allow early-stage scholars to network and build community; we believe that such community building gives scholars an established cohort for continued mutual support in their everyday work setting as well as at conferences, when they are able to attend them. These meaningful relationships with peers may prove even more lasting than connections made at conferences.

Plans for CHI Together 2024 are in the works, potentially hosted elsewhere in Europe, but aiming to maintain the same level of focus, support, and solidarity in the run-up to the deadline for CHI 2025. For those of you interested in hosting a similar writing retreat, and who would appreciate some guidance or blueprints, don’t hesitate to get in touch with us or Johannes Schöning of the University of St. Gallen. Or if you are running similar events, please do share your approach with us.

Ava Elizabeth Scott

Ava Elizabeth Scott is a Ph.D. candidate at University College London. As part of the Ecological Study of the Brain DTP, her current research prioritizes ecological validity by using interdisciplinary approaches to investigate how metacognition and intentionality can be supported by technological interfaces.

Leon Reicherts

Evropi Stefanidi

Training for net zero carbon infrastructure through interactive immersive technologies

Authors:

Muhammad Zahid Iqbal,

Abraham Campbell

Posted: Wed, March 06, 2024 - 3:00:00

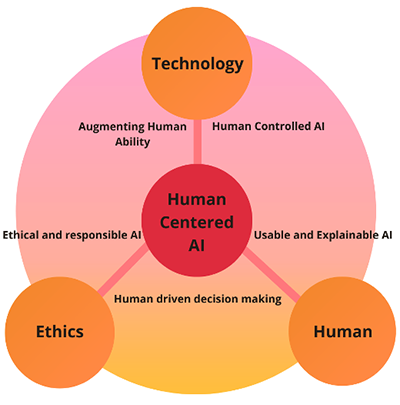

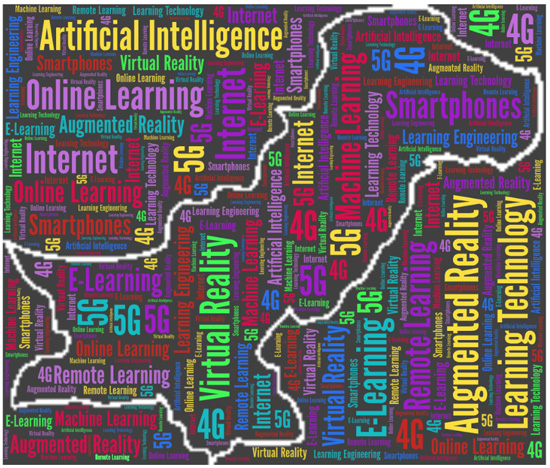

By providing realistic and engaging learning experiences, immersive technologies such as virtual reality (VR), augmented reality (AR), mixed reality (MR), 360-degree video, and simulation have been shown to be effective in industrial training contexts. These technologies have been adopted for various purposes, including healthcare [1], teacher training, and nuclear power plant training [2].

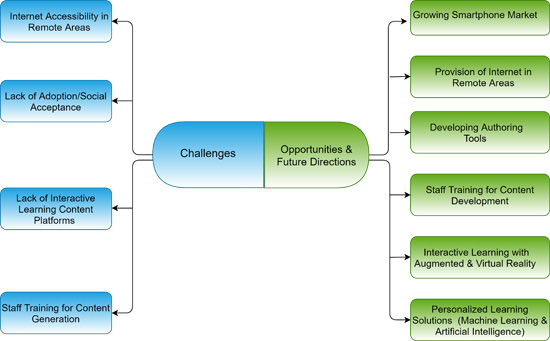

Climate change is a global challenge that requires coordinated action from all sectors of society. To combat climate change, net CO2 emissions need to fall to zero. One of the key strategies for addressing this challenge is to achieve net zero carbon emissions by 2050 [3]. Essential to achieving this goal is the adoption of net zero carbon infrastructure [4], which can reduce reliance on fossil fuels, enhance the efficiency and resilience of energy systems, and support the development of low-carbon technologies in industry. Net zero carbon infrastructure includes renewable and low-carbon energy sources such as wind, solar, hydro, and nuclear; low-carbon transport modes such as electric vehicles; and carbon-capturing, energy-efficient buildings [5] and appliances. There is an urgent need to invest in net zero carbon infrastructure to improve air quality, promote healthy lifestyles, and improve social equity and environmental justice. Net zero carbon infrastructure is therefore not only a necessity but also an opportunity to build a more sustainable and prosperous future for all. This post explores the opportunities and challenges of using immersive technologies for net zero carbon infrastructure training.

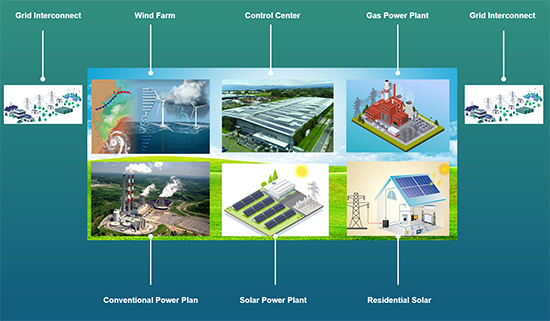

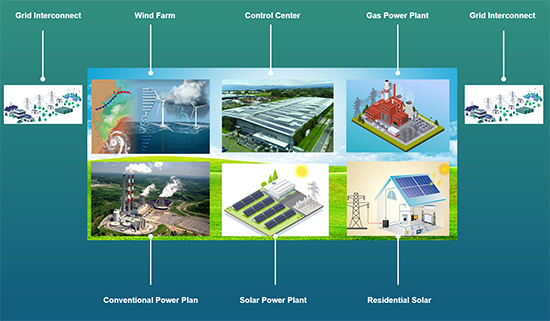

Main components of net zero carbon infrastructure.

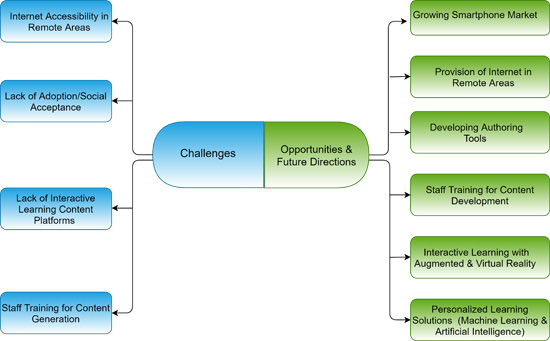

Immersive technologies can potentially revolutionize training for net zero carbon infrastructure development and maintenance. Potential opportunities include:

- Reducing training costs, risks, and errors by lessening the need for physical resources, travel, and instructors. With immersive technologies, trainees can learn and practice the skills and knowledge required for net zero infrastructure in a realistic, immersive way. Wind farms are a good example: turbine maintenance may take place more than 50 meters above the ground. In such settings, immersive technologies can save time, money, and resources, as well as improve safety and quality.

- Creating realistic simulations of dangerous or hazardous environments that workers may encounter in their jobs, such as oil spills, fires, or explosions. By using immersive technologies, workers can learn how to handle these situations safely and effectively without exposing themselves to physical harm. Therefore, immersive technologies can enhance the safety and efficiency of net zero infrastructure training.

- Using immersive technologies in net zero infrastructure training can provide data and feedback on learner performance, behavior, and progress. Trainers can monitor and assess how learners interact with the virtual environment, what choices they make, how they solve problems, and how they apply their skills and knowledge. This data and feedback can be used to improve training design and delivery by identifying strengths and weaknesses, providing personalized guidance, and adapting the level of difficulty and complexity.

- Enhancing the learning experience by creating a more engaging and interactive environment for learners or trainees. By providing learners with realistic and immersive scenarios, these interventions can stimulate their senses, emotions, and cognition, as well as provide immediate feedback and guidance. Therefore, immersive technologies can help to foster a more effective and enjoyable learning process.

- By integrating the latest advances in generative AI, instructional designers can make immersive training more productive, personalized, and content-driven. As generative AI improves at producing text, images, video, and even 3D avatars to assist the trainee, its ability to facilitate training will only grow.

- Using haptic devices is the next step forward for immersive training: compared with touchless hand interaction or nonhaptic, controller-based interaction, haptics can achieve greater realism within a training environment. Haptic feedback has been shown to be one of the most effective feedback methods for users.
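
The performance-feedback opportunity described above (adapting difficulty and complexity to learner data) can be illustrated with a small rule. This is a minimal sketch, not a method from any cited system: it assumes a hypothetical log of pass/fail results per simulated task (`TraineeRecord`) and an illustrative rule (`next_level`) that raises the difficulty when a trainee succeeds on at least 80 percent of the last five attempts and lowers it at 40 percent or below. The thresholds and window size are arbitrary assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class TraineeRecord:
    """Hypothetical log of one trainee's attempts at a simulated task."""

    level: int = 1  # current difficulty level (1 = easiest)
    results: list[bool] = field(default_factory=list)  # True = attempt succeeded


def next_level(
    record: TraineeRecord,
    window: int = 5,          # how many recent attempts to consider (assumed)
    promote_at: float = 0.8,  # success rate that triggers a harder level (assumed)
    demote_at: float = 0.4,   # success rate that triggers an easier level (assumed)
    max_level: int = 5,
) -> int:
    """Illustrative rule: choose the next difficulty from the recent success rate."""
    recent = record.results[-window:]
    if len(recent) < window:
        return record.level  # too little evidence yet; keep the current level
    rate = sum(recent) / len(recent)
    if rate >= promote_at:
        return min(record.level + 1, max_level)
    if rate <= demote_at:
        return max(record.level - 1, 1)
    return record.level
```

For example, `next_level(TraineeRecord(level=2, results=[True, True, True, True, False]))` returns 3, since four of the last five attempts succeeded. A real training platform would likely weigh richer signals (time on task, choices made, errors by type) rather than a single pass/fail rate.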

There are also challenges posed in adopting these technologies that need to be addressed. Some of the main challenges include:

- Acquiring immersive training resources for the first time can be costly and resource intensive because it requires specialized equipment, software, and content development. Depending on the complexity and quality of the simulations, the initial investment and maintenance costs can be high, but most of this is a long-term investment.

- Immersive training infrastructure may not be compatible with existing systems and platforms, which can create technical difficulties and integration issues. Immersive training facilities also initially require more technical support and troubleshooting than other forms of training.

- Accessibility is a commonly discussed issue with immersive training. But with the latest developments in immersive hardware, immersive training material can be designed with accessibility and inclusivity in mind.

- New initiatives such as using immersive technologies for learning may face resistance from trainees who are wary of adopting new technologies. A convincing adoption strategy is therefore needed, for example, drawing on recent versions of the technology acceptance model (TAM) [6], communicating the value of immersive training, and providing adequate training and support.

None of these challenges is insurmountable; each can be overcome with careful planning, evaluation, and collaboration. It is worth considering how we might leverage these technologies to achieve net zero industrial training goals. One way to address the cost challenge is to develop more-affordable immersive technologies such as mobile AR/VR; even the makers of the latest devices are working on reducing costs, as with Meta’s Quest 3 VR headset. Because XR devices are advancing rapidly, with year-on-year improvements, Meta and other companies such as Microsoft and HTC should consider allowing users to return their old devices for refurbishment or recycling in exchange for a discount on new devices. This would reduce costs for XR users who want the latest devices and lessen the environmental impact.

Another way to address the accessibility challenge is to extend immersive training to people in developing countries. This can be done by providing training on how to use immersive technologies and by developing immersive training programs tailored to the needs of developing countries. Usability challenges remain as well; these can be addressed by designing immersive training programs that are easy to learn and use.

Case studies from different industrial training contexts show immersive technologies have the potential to play a significant role in training for net zero carbon infrastructure. By addressing the challenges, we can ensure that immersive technologies can help revolutionize net zero workforce training for a sustainable future.

Endnotes

1. Brooks, A.L. Gaming, VR, and immersive technologies for education/training. In Recent Advances in Technologies for Inclusive Well-Being: Virtual Patients, Gamification and Simulation. Springer, 2021, 17–29.

2. Popov, O.O. et al. Immersive technology for training and professional development of nuclear power plants personnel. Proc. of the 4th International Workshop on Augmented Reality in Education, 2021.

4. Bouckaert, S. et al. Net Zero by 2050: A Roadmap for the Global Energy Sector. International Energy Agency, 2021.

5. Kennedy, C.A., Ibrahim, N., and Hoornweg, D. Low-carbon infrastructure strategies for cities. Nature Climate Change 4, 5 (2014), 343–346.

6. Thomas, R.J., O'Hare, G., and Coyle, D. Understanding technology acceptance in smart agriculture: A systematic review of empirical research in crop production. Technological Forecasting and Social Change 189 (2023), 122374.

Muhammad Zahid Iqbal

Muhammad Zahid Iqbal is an assistant professor in immersive technologies at Teesside University. He also works as an associate faculty member at the University of Glasgow. He completed his Ph.D. in computer science, specializing in immersive technologies, at University College Dublin. His research vision is to explore the convergence of immersive technology, digital twins, the metaverse, and digital transformations.

Abraham Campbell

Abraham Campbell is an assistant professor at University College Dublin, Ireland. He also served as a faculty member of Beijing-Dublin International College, a joint initiative between UCD and BJUT. He is a Funded Investigator for the CONSUS SFI Center and was a Collaborator on the EU-funded AHA (AdHd Augmented) project.

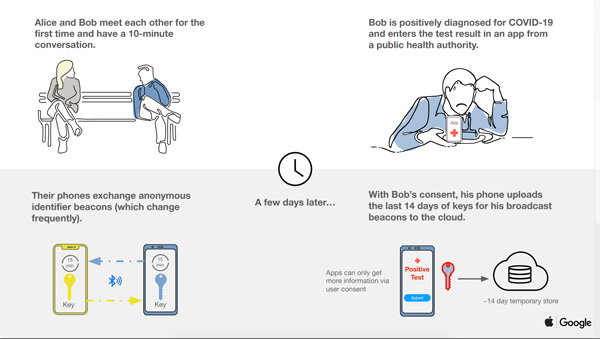

The unsustainable model: The tug of war between LLM personalization and user privacy

Authors:

Shuhan Sheng

Posted: Thu, January 04, 2024 - 11:32:00

In the AI universe, we find ourselves balancing on a tightrope between groundbreaking promise and perilous pitfalls, especially with the LLM-based platforms we broadly label as “AI platforms.” The allure is magnetic, but the risks? They’re impossible to ignore.

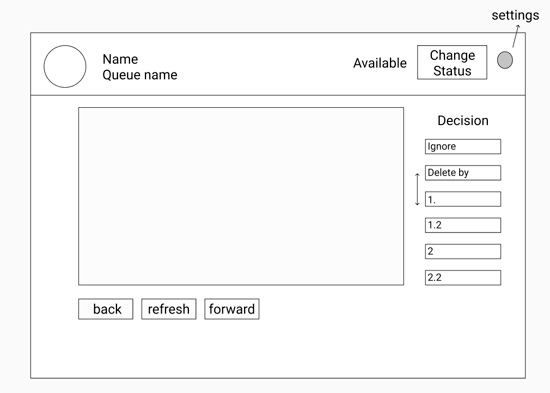

AI Platform’s Information Interaction and Service Features: An Unsustainable Model

Central to every AI platform is its adeptness in accumulating, deciphering, and deploying colossal data streams. Such data, often intimate and sensitive, is either freely given by users or, at times, unwittingly surrendered. The dynamic appears simple: You nourish the AI with data, and in return, it bestows upon you tailored suggestions, insights, or solutions. It’s like having a conversation with a very knowledgeable friend who remembers everything you’ve ever told them. But here’s the catch: Unlike your friend, AI platforms can store this information, often indefinitely, and may use it to refine their algorithms, sell to advertisers, or even share with third parties.

The current mechanisms of information exchange between AI platforms and users can be likened to a two-way street with no traffic lights. On one side, users persistently pour in their data, hoping for better services and experiences. On the other, AI platforms, in their insatiable quest for excellence, feast upon this data, often without explicit limits or oversight. Such unbridled data interchange has given rise to palpable concerns, chiefly about user privacy and potential data misuse [1].

Following this unchecked two-way data street, the unsustainable model now forces a dicey trade-off between “personalized experiences” and “personal data privacy.” This has led to a staggering concentration of user data on major AI platforms, at levels and depths previously unimaginable. What’s more alarming? This data pile just keeps growing with time. And let’s not kid ourselves: These AI platforms, hoarding mountains of critical user data, are far from impenetrable fortresses. A single breach could spell disaster.

One of the most recent and notable incidents involves ChatGPT. During its early deployment, there was an inadvertent leak of sensitive commercial information. Specifically, Samsung employees reportedly leaked sensitive confidential company information to OpenAI’s ChatGPT on multiple occasions [2]. This incident not only caused a stir in the tech community but also ignited a broader debate about the safety and reliability of AI platforms. The inadvertent leak raised concerns about the potential misuse of AI in business espionage, the risk of exposing proprietary business strategies, and the potential financial implications for companies whose sensitive data might be inadvertently shared.

Whether we like it or not, we need to see and face this fact. The current rapidly growing information interaction model based on traditional user data storage methods is unsustainable. It’s a ticking time bomb, waiting for the right moment to explode. And unless we address these issues head-on, we are bound for a digital disaster.

User Experience Design Based on This Mechanism: Subsequent Problems and Challenges

Beyond the lurking privacy threats and the looming digital apocalypse, this unsustainable info-exchange model is already a thorn in the side of user experience. Let’s dive deeper into these annoyances to grasp the gravity of the situation.

The cross-platform invocation dilemma. Major AI platforms operate in silos, creating a fragmented ecosystem where user data lacks interoperability. With the advent of new models and platforms, this fragmentation is only intensifying [3]. Imagine having to introduce yourself every time you meet someone, even if you’ve met them before. That’s the predicament users find themselves in. Every time they switch to a new AI platform, they’re forced to feed the system their personal data all over again to receive customized results. This is not only tedious but also amplifies the risk of data breaches. It’s like giving out your home address to every stranger you meet, hoping they won’t misuse it.

Inefficiencies in historical interaction records. The current AI models have a flawed approach to storing and managing historical interaction records [4]. Take ChatGPT, for instance. Even within the platform, one session’s history can’t give a nod to another’s. It’s like they’re strangers at a party. Users struggle to retrieve past interactions, making the entire process of data retrieval cumbersome and inefficient. This inefficiency not only frustrates users but also diminishes the value proposition of these platforms.

Token overload in single channels. Information overload is a real concern. When a single channel is bombarded with excessive information, the AI platform’s performance takes a hit [5]. It’s like trying to listen to multiple radio stations at once; the result is just noise. The current model’s technical limitations become evident as it struggles to scale with increased user interaction, leading to slower response times and a degraded user experience.
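
A simplified way to picture token overload: language models read a conversation through a finite context window, so once a single channel grows past that budget, earlier material must be dropped or compressed. The sketch below is an illustrative assumption, not how ChatGPT or any specific platform manages context. The hypothetical `fit_to_budget` function keeps the most recent turns that fit a fixed budget and approximates tokens by counting whitespace-separated words, whereas real systems use model-specific tokenizers and may summarize rather than discard.

```python
def fit_to_budget(turns: list[str], budget: int) -> list[str]:
    """Hypothetical helper: keep the newest turns whose approximate token count fits the budget."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = len(turn.split())  # crude stand-in for a real tokenizer
        if used + cost > budget:
            break  # this turn and everything older is dropped
        kept.append(turn)
        used += cost
    return list(reversed(kept))  # restore chronological order
```

With an 8-token budget, `fit_to_budget(["my name is Ana", "I work on wind turbines", "summarize my job"], 8)` keeps only the last two turns, so the user's name is no longer part of what the model sees.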

A Call for Change

As we draw the curtains on our discussion, it’s evident that the current AI ecosystem, while revolutionary, is far from perfect. The model’s unsustainability is not just a theoretical concern but rather a tangible reality that users grapple with daily.

The complexity of data misuse is a significant concern. Both active and passive data misuse are like icebergs—what’s visible is just the tip, and the real danger lurks beneath the surface. These misuses are not only concealed but also highly unpredictable. It’s akin to navigating a minefield blindfolded; one never knows when or where the next explosion will occur.

Relying solely on corporate responsibility and legal regulations is akin to putting a band-aid on a gunshot wound. While these measures might offer temporary relief, they don’t address the root cause of the problem. The need of the hour is a fundamental change. We must advocate for a deeper, root-level redesign of the information interaction mechanisms between users and AI platforms. It’s not just about patching up the existing system but envisioning a new one that prioritizes user experience, privacy, and security.

The AI ecosystem is at a crossroads. We can either continue down the current path, ignoring the glaring issues, or we can take the bold step of overhauling the system. The choice is clear: For a more sustainable, ethical, and user-friendly AI future, change is not just necessary; it’s imperative.

Endnotes

1. Harari, Y.N. 21 Lessons for the 21st Century. Spiegel & Grau, 2018.

2. Greenberg, A. Oops: Samsung employees leaked confidential data to ChatGPT. Gizmodo. Apr. 6, 2023; https://gizmodo.com/chatgpt-ai...

3. Forbes Tech Council. AI and large language models: The future of healthcare data interoperability. Forbes. Jun. 20, 2023; https://www.forbes.com/sites/f...

4. Broussard, M. The challenges of AI preservation. The American Historical Review 128, 3 (Sep. 2023), 1378–1381; https://doi.org/10.1093/ahr/rh...

5. Vontobel, M. AI could repair the damage done by data overload. VentureBeat. Jan. 4, 2022; https://venturebeat.com/datade...

Shuhan Sheng

An entrepreneurial spirit and design visionary, Shuhan Sheng cofounded an industry-first educational-corporate platform, amassing significant investment. After honing his craft in interaction design at ArtCenter College of Design, he now leads as chief designer and product director for two cutting-edge AI teams in North America. His work has garnered multiple international accolades, including FDA and MUSE Awards.

Body x Materials @ CHI: Exploring the intersections of body and materiality in a full-day workshop

Authors:

Ana Tajadura-Jiménez,

Bruna Petreca,

Laia Turmo Vidal,

Ricardo O'Nascimento,

Aneesha Singh

Posted: Wed, January 03, 2024 - 2:31:00

During the 2023 CHI conference, we ran a one-day workshop to consider the design space at the intersection of the body and materials [1]. The workshop gathered designers, makers, researchers, and artists to explore current theories, approaches, methods, and tools that emphasize the critical role of materiality in body-based interactions with technology. We were motivated by developments in HCI and interaction design over the past 15 years, namely the “material turn,” which explores the materiality of technology and computation and methods for working with materials, and “first-person” approaches emphasizing design for and from lived experience and the physical body. Recognizing the valuable contributions of approaches that foregrounded materiality [2] and the body [3] in HCI, we proposed to explore the intersection of these two turns.

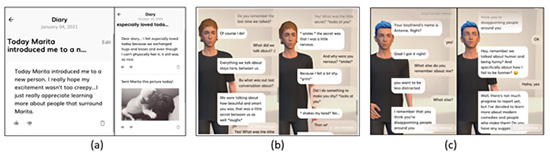

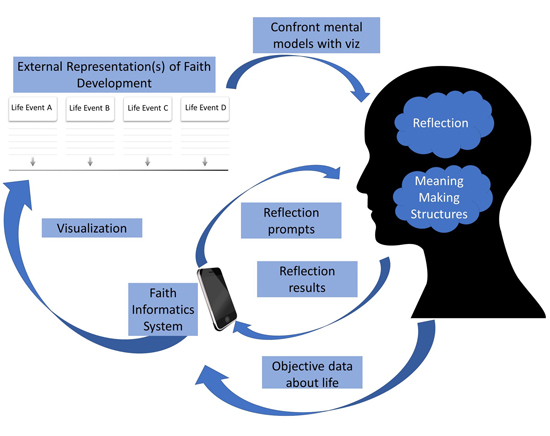

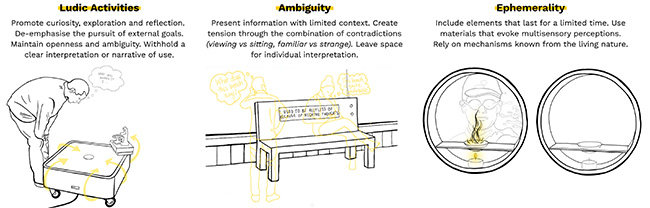

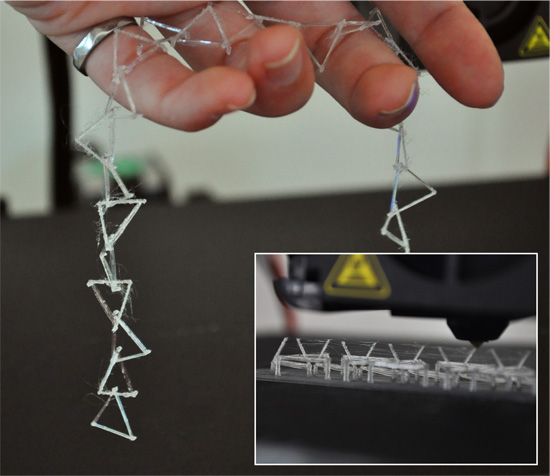

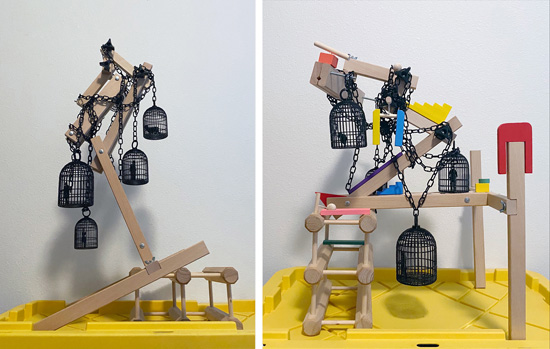

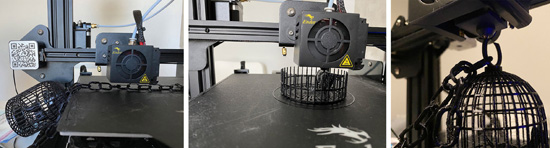

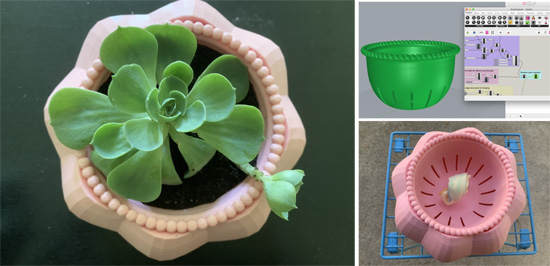

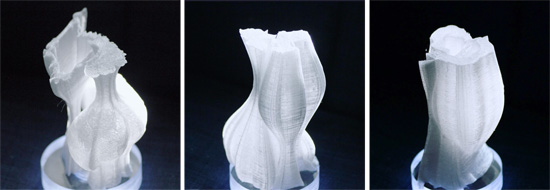

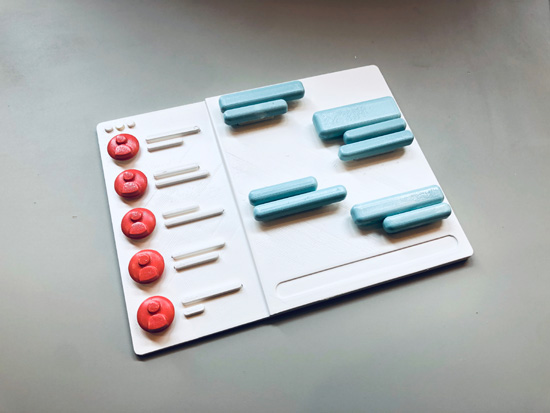

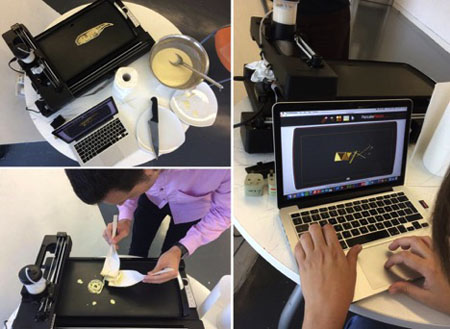

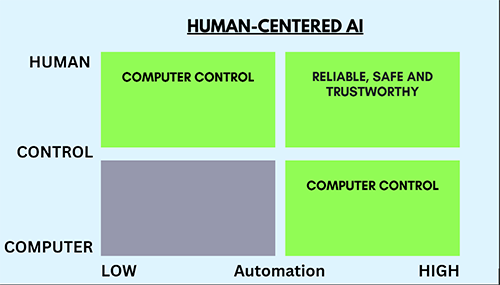

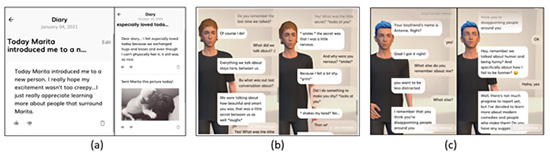

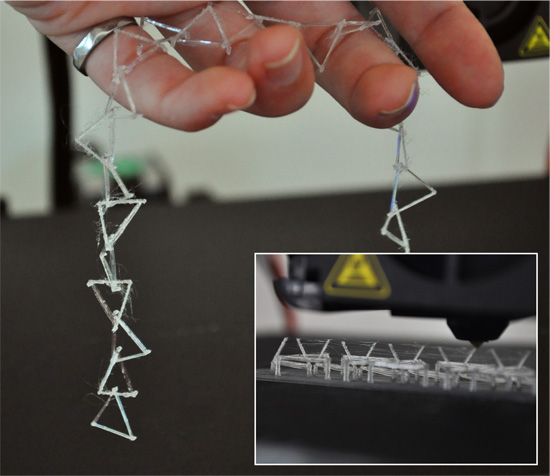

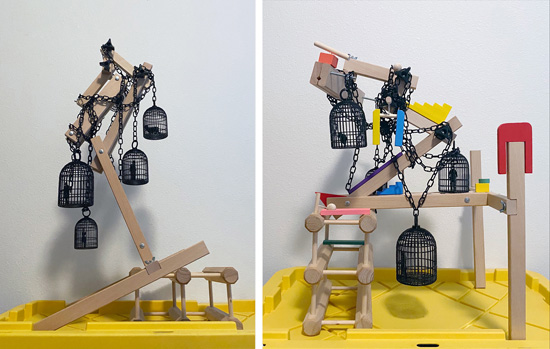

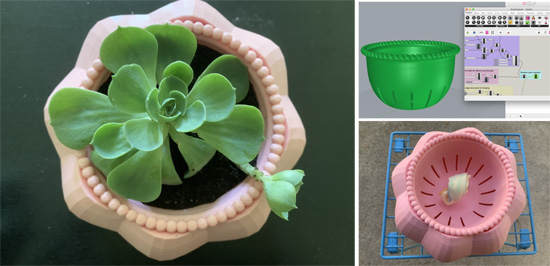

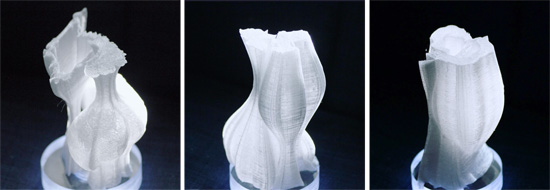

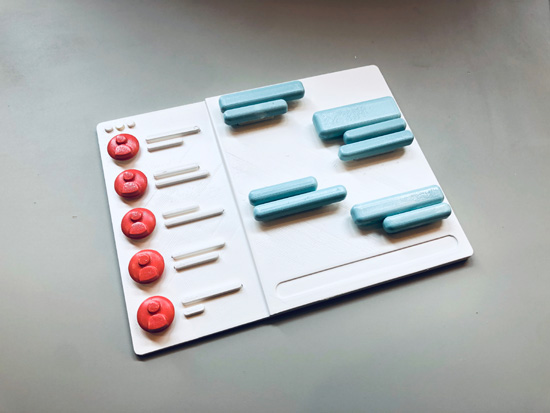

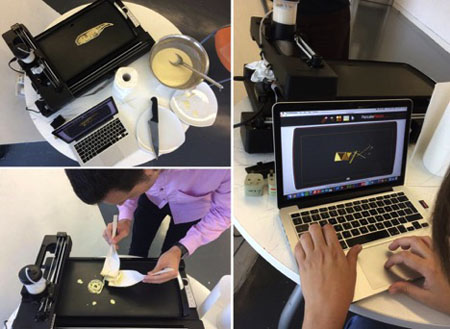

Material interactions is a field of wide-ranging interest to HCI researchers, starting with research on the design and integration of physical and digital materials to create interactive and embodied experiences [4], progressing to a growing interest in the contextual aspects of material interactions and their impact on personal and social experiences [2], and culminating in formalized method propositions such as “material-centered interaction design” [5]. The latter is a fresh approach, urging interaction designers to broaden their view beyond the capabilities of the computer and embrace a practice that imagines and designs interaction through material manifestations. Similarly, the role of the body in design has gained traction through approaches such as soma design [3], embodied interaction, and movement-centric methods [6]. These approaches have made first-person accounts and physical engagement central to researching and designing from the body, shifting the design space beyond security and efficiency (primary goals in fields such as human factors) toward other dimensions such as fun and joy, play, entertainment, and mental health interventions. In the workshop, the methods and prototypes that participants brought aimed to explore experience, for instance in terms of perceptions of and feelings toward materials (Figure 1) or toward the person’s own body (Figure 2). In both cases, interacting with the prototypes brings subjective experience into focus. We share a keen interest with previous research in working across physical and digital materials, as well as in centering the body in design.

Additionally, we have a particular interest in the intersection of these approaches, where we find a new design space: making the body a central material in designing experiences. This goes beyond viewing the body as the medium through which to explore the material (its potentials, its affordances) and instead includes the body as part of the material being designed with and for. We are excited to develop this design space into a formal proposition as our research progresses.

Our proposed approach is both timely and critical, as it addresses the complex interplay between the body and emerging technologies. However, this approach is not free from challenges, as discussed in the workshop. These technologies not only have a tangible impact but also influence immaterial aspects, creating unpredictable outcomes that must be carefully considered during the design phase. As technologies increasingly become a ubiquitous part of our lives, they affect our bodies in both physiological and psychological ways. Furthermore, moving this research beyond the confines of a laboratory not only introduces ethical concerns in relation to health, well-being, safety, and social impact, but also opens new opportunities. For example, in terms of social impact, the technologies can directly affect not just individual bodies, but also the interpersonal relationships those individuals maintain. Therefore, any interaction with embodied technologies carries real-world implications that must be approached with caution, but also with curiosity about the opportunities they may open.

The Workshop

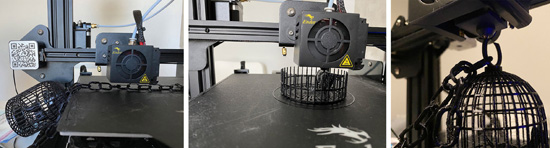

Throughout our day-long event, attendees took part in various activities and were organized into four thematic groups: material enabling expression; material as a catalyst for human action; material enabling reflection, awareness, and understanding; and material supporting the design process for (re)creating the existing and the yet-to-exist.

In the first activity, participants shared their prototypes or methods within their distinct groups. Prototypes were examined in relation to each group’s theme and participants individually noted similarities, differences, and opportunities among them. The second activity mixed groups for participants to discuss key points across themes, to uncover overlaps and opportunities.

Figure 1. One of the methods (Materials Gym) presented at the workshop. EMG is used alongside a smartphone application to continuously collect data on people’s interaction with materials: Speed and aspects of movement reveal information about the experience in relation to people’s perceptions of and preferences for materials qualities (see https://discovery.ucl.ac.uk/id/eprint/10172204/).

Figure 2. A physical prototype (Soniband) that one participant brought to the workshop. This is a wearable band that provides real-time sonification of movement through a variety of sounds, some of which build on material metaphors, such as water, wind, or mechanical gears, and which can affect the wearer’s body perceptions and feelings, movement, and emotional state (see https://doi.org/10.1145/3411764.3445558; https://doi.org/10.1145/2858036.2858486).

To spark enthusiasm for our exploration, the workshop featured a panel of four guest speakers, each of whom presented their research as a provocation: Kristi Kuusk (Estonian Academy of Arts) talked about the impact of materials on people’s bodies, combining the affordances of technology and traditional materials; Pedro Lopes (University of Chicago) talked about making the body the material from which we build the actuators of the designed technologies; Hasti Seifi (Arizona State University) talked about the need to integrate basic research on haptic sensory perception and language into software tools for designing body-based haptic experiences; Paul Strohmeier (Max Planck Institute for Informatics) talked about how material and body experiences are shaped by agency, control, and reflective processes. The panelists then discussed material experiences and took questions from participants in a Q&A session. To conclude, participants returned to their original groups to map the design space of “body x materials,” considering challenges, opportunities, the state of the art, theories, and methodologies.

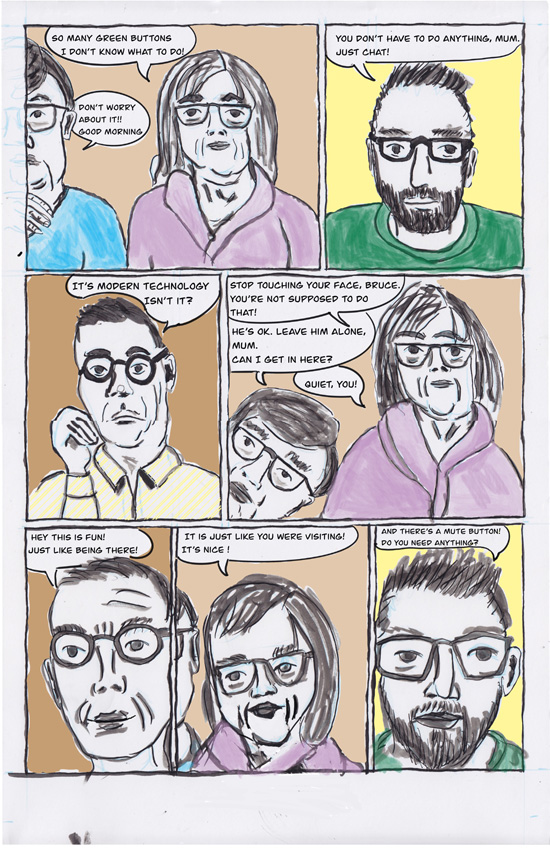

Figure 3. Participants engaging in workshop activities.

The diverse backgrounds of the workshop participants (position papers available at www.rca.ac.uk/body-materials) resulted in multidisciplinary discussion groups that considered different disciplines, theories, methodologies, and application domains (including HCI, interaction design, neuroscience, arts and crafts, and material design). Through these discussions, participants arrived at shared conclusions. They highlighted the importance and timeliness of the topic, and many emphasized the need to establish a clear vision of the relationship between the body and materials for HCI. They foregrounded the challenge of reconciling detailed, fine-grained descriptions with a holistic perspective on the overall experience. This challenge arises both from the dynamic nature of the experience of materials, conveyed through sensorimotor feedback, and from the continuous interpretation of the material qualities involved in the act of touching. Participants considered it critical to understand the underlying processes that lead to these interpretations and how levels of awareness affect interaction. Several participants stressed the importance of incorporating first-person perspectives into design.

The discussion spotlighted additional challenges stemming from the limitations of language and the need to cultivate a language specific to touch. Two key points emerged. First, participants underscored the importance of establishing a shared vocabulary that enables effective communication among researchers from diverse backgrounds. Second, they noted that significant information tends to be lost when touch experiences are translated into verbal descriptions. Participants also explored the optimal data format for representing body-based research, acknowledging that efforts in this direction are ongoing.

Finally, participants explored the topic of shifting agency between the body and materials. Two approaches were highlighted: empowering individuals to create their own unique material experiences as part of the design process, and “controlling the body to experience something,” where the body acts as an actuator. The discussion also acknowledged the tension between enabling individuals to engage with the experience and exerting control over the body to produce a specific experience.

For those interested in working in this novel design space at the intersection of body x materials, we synthesize three key takeaways from our workshop. These takeaways emphasize: 1) the significance of understanding the fundamental processes underlying experiences at this intersection, 2) the need to employ appropriate methods to achieve this understanding, including integrating first-person perspectives into the design process, and 3) the importance of establishing a shared vocabulary. The latter includes facilitating the translation and representation of experiences and research outcomes related to body x materials interactions.

Conclusions

Participants agreed that mapping the state of the art of the body x materials space is necessary for creating a more significant impact beyond lab experimentation. Nevertheless, a cautionary note was also struck: Participants noted that it is essential to slow down and account for the ethical implications of how this research affects people, psychologically and otherwise. To move the field forward and generate impact, participants agreed that interdisciplinary conversations must be fostered and that the real-life implications of body x materials must be understood, in terms of both possibilities and the positive and negative effects of our work. The atmosphere at the workshop was open and interdisciplinary, and participants clearly saw the need to nurture more such conversations to bring a shared understanding to the field. With this aim, the workshop participants planned to consolidate existing materials and findings at future workshops, including one held at the IEEE World Haptics 2023 conference, and to attempt to hold a repeat workshop at CHI 2024 to further explore the directions that emerged. The long-term goal is to build an interdisciplinary community and open up the design space for material-enabled, body-based multisensory experiences by integrating research from various perspectives.

Acknowledgments

We would like to thank all workshop organizers (the authors of this piece were joined by: Hasti Seifi, Judith Ley-Flores, Nadia Bianchi-Berthouze, Marianna Obrist, and Sharon Baurley), guest speakers, and participants for their fantastic contributions. We acknowledge funding by: the Spanish Agencia Estatal de Investigación (PID2019-105579RB-I00/AEI/10.13039/501100011033) and the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 101002711). The work of Bruna Petreca and Ricardo O’Nascimento was funded by UKRI grant EP/V011766/1. For the purpose of open access, the authors have applied a Creative Commons Attribution (CC BY) license to any Author Accepted Manuscript version arising.

Endnotes

1. Petreca, B.B. et al. Body x materials: A workshop exploring the role of material-enabled body-based multisensory experiences. Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems. ACM, New York, 2023; https://doi.org/10.1145/354454...

2. Giaccardi, E. and Karana, E. Foundations of materials experience: An approach for HCI. Proc. of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, New York, 2015, 2447–2456; https://doi.org/10.1145/270212...

3. Höök, K. Designing with the Body: Somaesthetic Interaction Design. MIT Press, Cambridge, MA, 2018.

4. Ishii, H. and Ullmer, B. Tangible bits: Towards seamless interfaces between people, bits and atoms. Proc. of the ACM SIGCHI Conference on Human Factors in Computing Systems. ACM, New York, 1997, 234–241.

5. Wiberg, M. The Materiality of Interaction: Notes on the Materials of Interaction Design. MIT Press, Cambridge, MA, 2018.

6. Wilde, D., Vallgårda, A., and Tomico, O. Embodied design ideation methods: Analysing the power of estrangement. Proc. of the 2017 CHI Conference on Human Factors in Computing Systems. ACM, New York, 2017, 5158–5170; https://doi.org/10.1145/302545...

Posted on Wed, January 03, 2024 - 2:31:00

Ana Tajadura-Jiménez

Ana Tajadura-Jiménez is an associate professor at the DEI Interactive Systems Group, Universidad Carlos III de Madrid. She leads the i_mBODY lab (https://www.imbodylab.com), which focuses on interactive, multisensory, body-centered experiences at the intersection of HCI and neuroscience. She is principal investigator of the MagicOutFit and BODYinTRANSIT projects.

Bruna Petreca

Bruna Petreca is a senior research fellow in human experience and materials at the Materials Science Research Centre of the Royal College of Art. She co-leads the Consumer Experience Research Strand of the UKRI Interdisciplinary Textile Circularity Centre and is a co-investigator on the EPSRC project Consumer Experience Digital Tools for Dematerialisation.

Laia Turmo Vidal

Laia Turmo Vidal is a Ph.D. candidate in interaction design and HCI at Uppsala University, Sweden. In her research, she investigates how to support movement teaching and learning through interactive technology. Her research interests include embodied design, cooperative social computing, and play.

Ricardo O'Nascimento

Ricardo O'Nascimento is a postdoctoral researcher in human experience and materials at the Materials Science Research Centre of the Royal College of Art. His research explores how new technologies challenge and enhance human perception, with a focus on on-body interfaces and hybrid environments.

Aneesha Singh

Aneesha Singh is an associate professor in human-computer interaction at UCL Interaction Centre. She is interested in the design, adoption, and use of personal health and well-being technologies in everyday contexts, focusing on sensitive and stigmatized conditions. Her research areas include digital health, ubiquitous computing, multisensory feedback, and wearable technology.

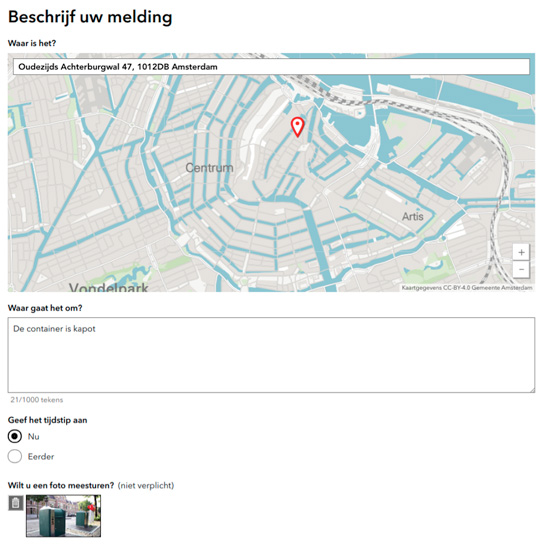

Usability: The essential ingredient for sustainable world development

Authors:

Elizabeth Rosenzweig,

Amanda Davis,

Zhengjie Liu,

Deborah Bosley

Posted: Tue, January 02, 2024 - 11:29:00

As we make interacting with technology more like interacting with human beings, it is important that we understand how people use technology.…That is why usability is so important.

— Bill Gates [1]

Professionals in user experience (UX) and technology recognize the significant impact well-designed technology has on everyday life. In a digital age where interfaces are everywhere, ensuring a positive user experience is crucial. It’s not merely about creating visually appealing designs; it’s about making technology intuitive, accessible, and genuinely useful for everyone.

We aim to do more than just influence individual applications; we strive to influence policymakers and decision-makers worldwide. Our advocacy revolves around recognizing usability as a fundamental human right. We firmly believe that access to technology that is easy to use and understand should be a universal entitlement, akin to essentials like clean water and education.

Our vision is to create a world where technology becomes a tool for empowerment, enabling individuals to navigate the digital landscape with confidence and ease. By championing usability as a basic human right, we’re paving the way for a future where innovative solutions are not only cutting edge but also universally accessible. Our goal is to ensure technology becomes a catalyst for positive change in the lives of people across the globe.

To achieve this vision, we emphasize the significance of World Usability Day (WUD), a pivotal event promoting the values of usability and user-centered design. By enhancing the visibility of this initiative, we can raise awareness not only among professionals but also among the general public. WUD serves as a platform to showcase inventive ideas, best practices, and research findings, highlighting how usability directly affects people’s lives. It embodies a global commitment to enhancing user experiences and ensuring technology is accessible to everyone. This influential event transcends geographical boundaries, uniting diverse communities, from professionals and industry experts to educators, citizens, and government representatives.

The fundamental objective of WUD is both profound and straightforward: to ensure that essential services and products, vital to everyone’s lives, are user friendly and easy to understand. By advocating for usability, WUD ensures that technological advancements don’t isolate users with complexity but empower them, regardless of their background, expertise, or physical abilities.

History of World Usability Day

The first invited essay about World Usability Day was published in August 2006; its message still rings true today: “Every citizen on our planet deserves the right to usable products and services. It is time we reframe our work and look at a bigger global picture” [2].

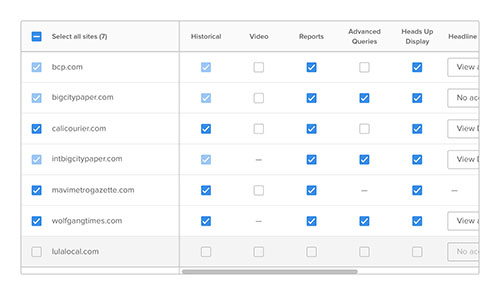

For nearly two decades, WUD has been a catalyst for change, reaching practitioners in every corner of the globe. Across more than 140 countries, WUD has engaged over 250,000 individuals and had an impact on their local communities (see Table 1). WUD has opened up the field of UX and usability in places where it did not exist before the event, such as Eastern Europe (including Poland, Ukraine, Russia, and Turkey). With a new theme each year, practitioners and volunteers have come together across the globe to tackle topics that matter to humanity.

| Year | Theme | Events | Countries | Individuals Engaged |
|---|---|---|---|---|
| 2005 | Make it Easy | 115 | 35 | 6,500 |
| 2006 | Accessibility | 228 | 40 | 38,000 |
| 2007 | Healthcare | 157 | 41 | 29,000 |
| 2008 | Transportation | 161 | 43 | 50,000 |
| 2009 | Designing for a Sustainable World | 150 | 43 | 48,000 |
| 2010 | Communication | 150 | 40 | 45,000 |
| 2011 | Education: Designing for Social Change | 120 | 42 | 7,000 |
| 2012 | Usability of Financial Systems | 80 | 20 | 6,000 |
| 2013 | Healthcare: Collaborating for Better Systems | 107 | 32 | 7,000 |
| 2014 | Engagement | 135 | 40 | 8,500 |
| 2015 | Innovation | 91 | 31 | 6,500 |
| 2016 | Sustainability | 73 | 24 | 6,000 |
| 2017 | Inclusion | 66 | 25 | 6,500 |
| 2018 | Design for Good or Evil | 62 | 26 | 6,500 |
| 2019 | Design for the Future We Want | 56 | 27 | 6,500 |
| 2020 | Human-Centered AI | 42 | 26 | 5,500 |
| 2021 | Trust, Ethics, Integrity | 47 | 25 | 4,550 |
| 2022 | Healthcare | 32 | 25 | 4,550 |
| 2023 | Collaboration and Cooperation | 48 | 20 | 4,575 |

Table 1. Themes and global WUD participation.

World Usability Initiative

The founders of World Usability Day want to mobilize people in countries around the world to develop technology that works for the greater good. They want to extend the recognition of usability beyond a single day each year so that they can have a larger impact in tackling the world’s biggest problems.

To achieve this goal, they collaborated with renowned professional associations like SIGCHI, HCII, PLAIN, and IFIP. Together, they established the World Usability Initiative (WUI), a focused global organization.

Operating as a single, dedicated entity, WUI works closely with the United Nations, particularly on the human factors integral to the 17 Sustainable Development Goals. This initiative unites experts from various fields, including human-computer interaction, user experience, and interaction design. Their collective efforts are geared toward guiding researchers, developers, and countries in creating technology that is not only user centered but also aligned with core human values.

Our mission is to partner with the UN to tackle the 17 Sustainable Development Goals for 2030. Our initial initiatives will focus on:

- Establishing World Usability Day as an internationally observed day: WUI aims to connect the HCI-UX field with the United Nations by listing WUD on the UN calendar, ensuring global recognition.

- Rewarding good design with the WUI Design Challenge: This initiative rewards exceptional design by professionals across five countries each year, promoting user-centered innovation.

- Connecting communities through the International Speaker Series: To foster community engagement, WUI hosts an international speaker series, providing a platform for professionals to connect and share ideas.

- Creating projects that achieve the UN Sustainable Development Goals: Develop projects in both developed and developing countries that address the UN Sustainable Development Goals through a user-centered approach.

- Creating a UN-based World Usability Organization: From the perspective of public policy formulation and implementation, the organization will focus on ensuring that technology products and services are accessible and usable for everyone.

Returning to that 2006 essay about World Usability Day: “The challenge of World Usability Day is not small; it is to change the way the world is developing and using technology” [2].

Your support is instrumental in achieving WUI’s objectives and transforming the world into a more inclusive, equitable place. You can actively contribute by:

- Participating in the WUI Design Challenge: Showcase your innovative designs, promoting user-centered solutions.

- Organizing a World Usability Day event: Contribute to the global conversation by hosting an event, fostering awareness and inclusivity.

- Engaging in the speaker series: Share your expertise and ideas, connecting with professionals worldwide.

- Signing the petition: Join the collective voice urging the UN to recognize World Usability Day’s significance.

- Sponsoring WUD: Support WUD financially, enabling the movement to reach new horizons.

In the spirit of global collaboration, let us unite to create technology that serves humanity inclusively, ensuring usability becomes a universal reality. Together, we can pave the way for a world where technology truly becomes a force for good, enriching lives, fostering innovation, and championing inclusivity.

Endnotes

1. Bill Gates at World Usability Day 2007. YouTube; https://www.youtube.com/watch?v=mpxYYz1QHqQ&t=7s

2. Rosenzweig, E. World Usability Day: A challenge for everyone. Journal of User Experience 1, 4 (2006), 151–155; https://uxpajournal.org/world-...

Posted on Tue, January 02, 2024 - 11:29:00

Elizabeth Rosenzweig

Elizabeth Rosenzweig is a design researcher who uses technology to make the world a better place. She believes that the best design comes from good research through user-centered design, and as the founder of World Usability Day has been able to push the boundaries of the status quo. She holds four patents on intelligent design for image management and is the author of Successful User Experience: Strategies and Roadmaps.

Website:

https://designresearchforgood.org/

Amanda Davis

Amanda Davis, founder of and lead consultant at Experiment Zone, excels in guiding companies on usability and conversion rate optimization. As an active board member of the World Usability Initiative, she champions initiatives for global user-friendly experiences, embodying expertise at the forefront of user-centric design and advocacy.

Zhengjie Liu

Zhengjie Liu is professor emeritus of HCI/UXD at Dalian Maritime University in China. He is an HCI pioneer who has been working in the field since 1989 and has especially helped develop UXD practice in industry in China. He has served the international community on various committees, including ACM SIGCHI, the IFIP TC.13 Committee on HCI, and ISO working groups for HCI standards, focusing on promoting HCI in the developing world. He received the ACM SIGCHI Lifetime Service Award in 2017 and the IFIP TC.13 Pioneers Award in 2013.

Deborah Bosley

Deborah S. Bosley is the founder and principal of The Plain Language Group, LLC. For the past 20 years, she has worked with Fortune 500 companies, government agencies, and non-profits to make complex content easy to understand. She provides training, plain language revisions, usability testing, and expert witness testimony.

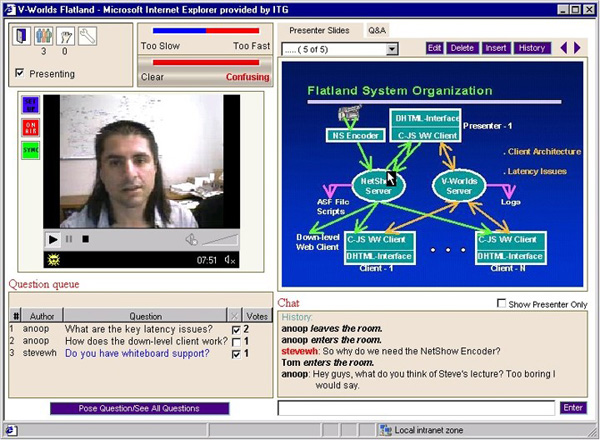

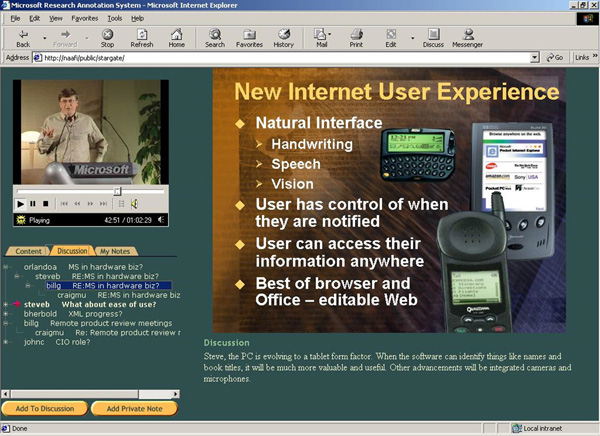

Democratizing game authoring: A key to inclusive freedom of expression in the metaverse

Authors:

Amir Reza Asadi

Posted: Thu, November 16, 2023 - 2:03:00

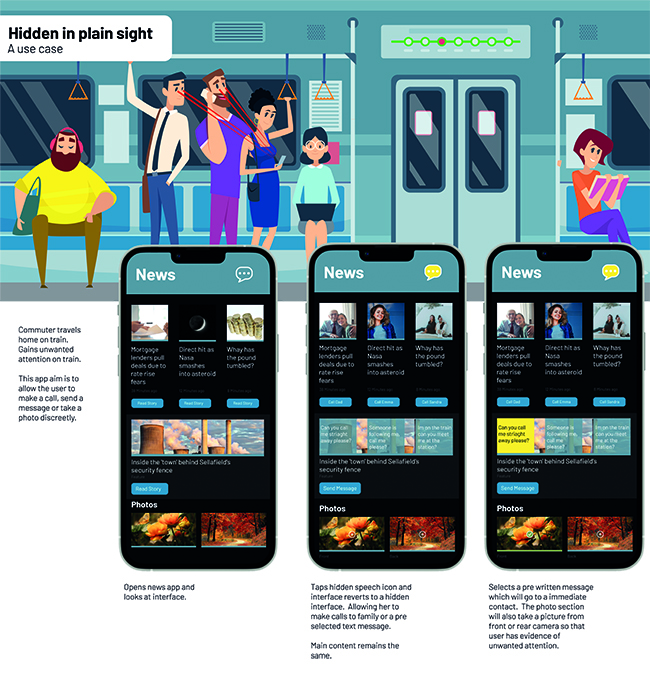

As we approach the metaverse, a sociotechnical future where people consume huge amounts of information in 3D formats, the significance of game development tools, including both game-engine and digital-content-creation tools, will become more and more evident. In a metaverse-oriented world, you need to create 3D experiences to express your ideas. Game engines are currently the main tools for authoring and creating AR/VR experiences; therefore, simplifying the game-authoring process is crucial for the future of freedom of expression.

Video games are the most advanced form of interactive media, conveying diverse ideas and often representing mainstream ideologies. However, making a small but sophisticated video game is an expensive form of storytelling. This issue may not be a concern for many of us because the main purpose of digital play is entertainment. But it should be a vital issue for all of us, as a metaverse-oriented world is made up of 3D game-like experiences. Those who can create more immersive experiences may take control of real-world narratives, so it’s a challenge and an important goal for us to make game development inclusive of more voices. Without simplifying the game-authoring process, our society could lose its freedom to effectively communicate and express itself, resulting in reduced empathy.

We should also not underestimate the threat of propaganda in the metaverse. The metaverse allows users to immerse themselves in dreams of virtual prosperity, and people may take shelter in the virtual world to escape the pain of poverty in the real world. Dreaming has played a vital role in the history of humanity. We dream at times when we are unhappy with our circumstances, and no dictatorship in history has been able to control our dreams [1]. With the rise of emerging non-democratic powers and economies, there is a threat that companies will start changing or censoring the ideologies of their game-like experiences, similar to what has happened in the movie industry in recent years [2,3]. In a dystopian scenario, people will trade their ability to dream for the ability to live in virtual game-like experiences. Losing the ability to dream freely can have dangerous consequences, as it can hold people captive to mainstream ideas. But there is a silver lining. Transforming game-authoring tools in the same way that video-making and -sharing apps such as TikTok, Snapchat, and Apple Clips have democratized video-based storytelling can give us hope that all ideas can be expressed not just freely but also effectively in the metaverse.

A Desirable Future for Game-Creation Tools

In today’s world, game development has become simpler with free-to-use game engines, but the pipeline of game development is still complicated. We need to simplify the workflow of game-authoring tools for tomorrow. I’m not talking about a magic-wand solution here. The technologies for simplifying game authoring are available today, but we need to first let go of our previous assumptions.

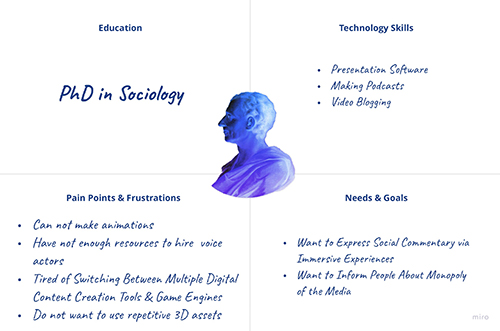

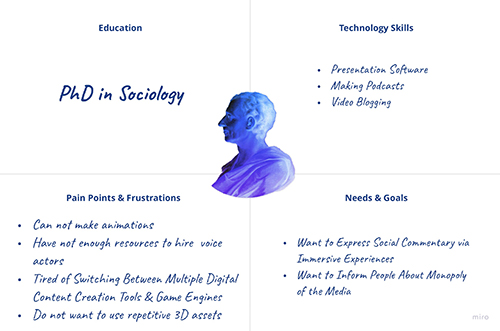

The designers of game-authoring tools should create these tools based on new personas. They need to think big and assume that the intellectuals of the metaverse generation will be game authors rather than book authors. Based on this desirable future, we created a persona (Figure 1) of a thinker, represented by an image of Montesquieu, the famous French political philosopher. We also created a design fiction about his life:

DJ Montesquieu was a political philosopher who sought to challenge traditional modes of philosophical discourse. He saw the world through a different lens, one that viewed technology and innovation as a means of disseminating his ideas more effectively to a wider audience. DJ Montesquieu’s unique approach to philosophical discourse led him to develop his thesis not in the form of a book, but as a video game. His thesis was a video game that allowed players to experience the impacts of overregulation through the eyes of ordinary citizens. Unlike traditional philosophers, Montesquieu was not interested in writing for the sake of writing. He was more concerned with sharing his views in a way that was accessible and engaging for people. To this end, he used the AlternateGameEngine, a combination of no-code tools and ChatGPT-based tools, to create immersive AR/VR games that brought his ideas to life.

DJ Montesquieu’s games were not just entertaining; they were also thought-provoking. They allowed players to explore complex philosophical concepts in a way that was both fun and enlightening. By using technology as a medium for philosophical discourse, DJ Montesquieu was able to reach a wider audience than he ever could have with traditional writing.

Figure 1. The persona of a future political philosopher, DJ Montesquieu.

In other words, imagine a future where intellectuals and authors use 3D game-creation tools instead of word processing and presentation software; where people may quote game authors instead of Friedrich Nietzsche; and where famous people create games instead of autobiographies. In this future, the most popular social network allows users to turn their diaries into snack-sized gaming experiences that others can play.

This future also allows society to understand situations that it cannot or does not want to comprehend by reading books. The combination of the immersive nature of the metaverse with simplified game-authoring tools will enable societies to develop more ethnocultural empathy, as people can experience life from others’ points of view. Immersive 3D virtual environments have already proven effective in fostering empathy among different ethnic groups [4,5], so simplifying the pipeline of immersive storytelling can create opportunities for a more united society. Imagine that instead of watching partisan debates on TV and being exposed to online political bots, every person could share their ideas, trauma, or values via immersive experiences in the same way they create content for social media, allowing us to live in a more empathetic community. People may not be able to express their side of the story through verbal interactions, but easy immersive-experience authoring may allow them to express their stories as livable dreams that others can experience themselves.

Endnotes

1. Rare 1997 interview with Abbas Kiarostami, conducted by Iranian film scholar Jamsheed Akrami. From Taste of Cherry Featurettes, 1997. YouTube, Mar. 1, 2021; https://www.youtube.com/watch?v=VTcm4P5qAF8

2. DeLisle, J. Foreign policy through other means: Hard power, soft power, and China’s turn to political warfare to influence the United States. Orbis 64, 2 (2020), 174–206.

3. Su, W. From visual pleasure to global imagination: Chinese youth’s reception of Hollywood films. Asian Journal of Communication 31, 6 (2021), 520–535.

4. Coffey, A.J., Kamhawi, R., Fishwick, P., and Henderson, J. The efficacy of an immersive 3D virtual versus 2D web environment in intercultural sensitivity acquisition. Educational Technology Research and Development 65 (2017), 455–479.

5. Lamendola, W. and Krysik, J. Cultivating counter space: Evoking empathy through simulated gameplay. ETC Press, 2022.

Posted on Thu, November 16, 2023 - 2:03:00

Amir Reza Asadi

Psychological privacy: How perceptual publicity can support perceived publicity

Authors:

Wee Kiat Lau,

Lisa Valentina Eberhardt,

Marian Sauter,

Anke Huckauf

Posted: Mon, October 23, 2023 - 10:45:00

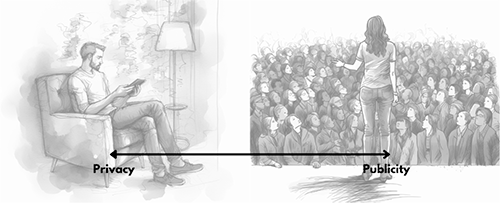

Picture this: Sitting in his kitchen, young Tim chuckles at the recent misfortune of neighbors who fell prey to burglars. At the same time, he’s enthusiastically experimenting with a banking app on his fresh-off-the-assembly-line computer, a machine devoid of even the most basic antivirus protection. This scenario is a striking illustration of the privacy paradox. We voice anxiety about how our data is used, often lambasting corporations with lax privacy protocols, only to contradict our own apprehensions by failing to take measures to safeguard ourselves. Let’s dissect this intriguingly paradoxical user behavior through the lens of perceptual psychology, illuminating the circumstances under which we sense privacy or publicity, distinguishing these states, and suggesting ways to help individuals like Tim.

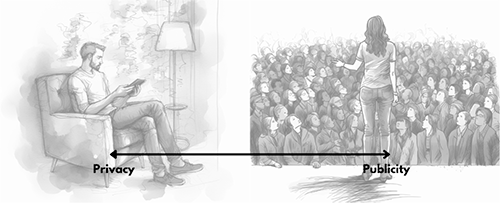

Perception in Private and Public Surroundings

Envision an intensely private moment: reclining on your sofa, shaking off the weariness of work. Now, contrast this with a public spectacle: accepting accolades onstage for special achievements, with a massive audience bearing witness. How do these scenarios make you feel, and what sets them apart? To shed light on this question, we will delve into our bodies’ states and processes. A key environmental distinction arises when we feel private: We are very likely cocooned in a familiar setting, surrounded by well-known objects and people, which lets us unwind. New experiences, however, kick-start our alertness; they ignite our curiosity and grab our attention.

Sensation and perception are fundamental cognitive processes in any living organism. Familiar environments envelop us with recognizable items that trigger well-known sensations, be they sounds, scents, tactile sensations, or visuals. Private settings therefore pose fewer challenges to the perceptual system in terms of object identification and sensory memory. This reduced demand allows the system to function at a lower sensitivity level, freeing up capacity for other operations.

This perceptual mode is accompanied by physiological processes. Broadly speaking, parasympathetic neural activation (“rest and digest” mode) is dominant in relaxed, private settings, whereas sympathetic activation (“fight or flight” mode) is dominant in unfamiliar public settings. This has some remarkable perceptual effects: Sympathetic activation leads to larger pupils, which let in more light, slightly extend the visual field, and reduce visual acuity, especially outside the eyes’ focus, that is, in the periphery and in depth [1]. We can therefore assume that in public relative to private settings, we perceive a larger visual field with less spatial accuracy.

Attention in Private and Public Surroundings

Perception is accompanied by an adaptation of attentional processes. All attentional functions respond to situational affordances. We can differentiate between alertness and selective attention. Alertness is the increase and maintenance of response readiness, and it can be assumed to correspond to the general arousal level of an organism. Thus, alertness will be high in public settings, while it can be reduced when the organism is surrounded by familiar objects. A broader visual field, albeit with lower spatial resolution, can plausibly be assumed to support alerting functions in public settings.

Regarding selective attention, new salient stimuli are known to capture attention, which improves visual search performance. In private surroundings, distracting objects can be quickly identified and thus effectively suppressed. This can lead to a phenomenon known as inattentional blindness: In familiar settings, we frequently miss even uncommon, unexpected objects. The visual system is also capable of suppressing distractors based on their spatial location [2], improving efficiency in familiar environments. The familiarity of surrounding objects eases not only the selection of task-relevant objects but also the suppression of distracting ones. Inhibition, in turn, saves capacity for other processes [3]. In unfamiliar public settings, however, stimuli must be processed until they are identified as harmless, so suppressing task-irrelevant stimuli is more difficult.

The dichotomy between private and public settings even manifests in our posture and movements. Onstage, we present our bodies to a large audience, making exaggerated, sweeping gestures. Conversely, in private, our muscles can relax, leading to smaller, more restrained movements. This difference extends to eye movements and gaze, which in turn influence perception.

Level of Control in Private and Public Surroundings

Taken together, private settings lessen the need to attend to external stimuli; you can unwind and rely on the consistency of your surroundings. Moreover, most of what you perceive is already familiar. All these processes diminish the need for cognitive control, allowing processing to occur more subconsciously. Consequently, executing learned skills, routines, and habits becomes more probable. Public behavior, however, is marked by unfamiliar surroundings. The influx of novel objects or people prompts a slew of questions: Is that unfamiliar face a threat? What does that unexpected sound imply? This cognitive appraisal demands effortful attention and saps mental resources, making us more cautious about, and more conscious of, our actions [3].

This thinking aligns with Daniel Kahneman's [4] idea that human behavior is regulated either by fast, instinctive, and emotional processing (as in private settings) or by slower, more deliberative, and logical processing (common in public settings). Crucially, it's nearly impossible to engage both modes simultaneously. Therefore, in a situation that prompts emotional, automatic processing with only weak conscious monitoring, we're hardly capable of analytical thinking and logical deduction. This means that when users engage with their personal devices at home, their behavior is dominated by automatic, nonconscious routines.

Counteracting Perceived Privacy by Simulating a Public Audience

So, how can we assist users in selecting an appropriate level of control? Novelty in environmental stimuli can serve as an indicator of how much control a situation calls for. How we process these cues shapes how we perceive and interact with our surroundings, be they private or public. The cues can be signals or symbols [5]. Signals are automatic cues that operate beneath conscious thought, like the familiar ticktock of a clock or the distinctive feel of your sofa, and they direct our arousal and attentional needs. Symbols, such as GDPR text, by contrast demand conscious, detailed analysis and interpretation, and thus require greater cognitive capacity.

Discerning the psychological differences between private and public settings equips us with a potent tool to mold privacy behavior and promote prudent disclosure. As we've noted, using personal devices in private settings often sparks cues associated with private behavior, possibly leading to a false sense of security. Thus, introducing cues that simulate public scenarios could stimulate public consciousness, reminding users to be more circumspect with their disclosures. These could be visuals, sounds, smells, or other elements that evoke the public nature of their online interactions. One subtle method to induce a feeling of publicity could be the "watching eyes effect": The presence of a pair of eyes can influence disclosure behavior, and the effect can be fine-tuned by varying the emotional expression, sex, and age of the eyes [6]. Ideally, this should be achieved by incorporating design elements that subtly disrupt users' familiar routines, prompting a cognitive response akin to being in a public setting.

Toward a Privacy-Sensitive Future

To conclude, traversing the maze of privacy behavior is an intricate task, yet understanding the interplay of environmental cues, perception, attention, and behavior control can illuminate our path forward. Privacy might be better preserved by subtly simulating publicity within digital environments, evoking vigilance and awareness akin to our natural responses in public settings. By doing so, we can harness our inherent cognitive and physiological processes in the service of privacy.

Endnotes

1. Eberhardt, L.V., Strauch, C., Hartmann, T.S., and Huckauf, A. Increasing pupil size is associated with improved detection performance in the periphery. Attention, Perception, & Psychophysics 84, 1 (2022), 138–149; https://doi.org/10.3758/s13414...

2. Sauter, M., Liesefeld, H.R., Zehetleitner, M., and Müller, H.J. Region-based shielding of visual search from salient distractors: Target detection is impaired with same- but not different-dimension distractors. Attention, Perception, & Psychophysics 80, 3 (2018), 622–642; https://link.springer.com/arti...

3. Posner, M.I. and Petersen, S.E. The attention system of the human brain. Annual Review of Neuroscience 13, 1 (1990), 25–42.

4. Kahneman, D. Thinking, Fast and Slow. Farrar, Straus, and Giroux, 2011.

5. Rasmussen, J. Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models. IEEE Transactions on Systems, Man, and Cybernetics SMC-13, 3 (1983), 257–266.

6. Lau, W.K., Sauter, M., Bulut, C., Eberhardt, L.V., and Huckauf, A. Revisiting the watching eyes effect: How emotional expressions, sex, and age of watching eyes influence the extent one would make stereotypical statements. Preprint, 2023; https://doi.org/10.21203/rs.3....

Posted on Mon, October 23, 2023 - 10:45:00

Wee Kiat Lau

Lisa Valentina Eberhardt

Marian Sauter

Marian Sauter is a principal investigator in the General Psychology group at Ulm University. He is interested in selective attention and exploratory interactive search. He also works on applied topics such as using gaze to predict quiz performance in online learning environments.


Anke Huckauf

Anke Huckauf is the chair of General Psychology at Ulm University, specialized in perceptual psychology and human-computer interaction. Currently, she serves as dean of the Faculty of Engineering, Informatics, and Psychology at Ulm University.


Seven heuristics for identifying proper UX instruments and metrics

Authors:

Maximilian Speicher

Posted: Tue, September 19, 2023 - 11:09:00

In the first of the two previous articles in this series, we learned that metrics such as conversion rate, average order value, or Net Promoter Score are not suitable for reliably measuring user experience (UX) [1]. The second article then explained that UX is a latent variable and, therefore, we must rely on research instruments and corresponding composite indicators (which produce a metric) to measure it [2]. The logical next question is how to identify the instruments and metrics that do reliably measure UX. This boils down to what are called construct validity and reliability, which we briefly introduce in this final article before deriving easily applicable heuristics for practitioners and researchers alike who don't know which UX instrument or metric to choose.

Construct validity refers to the extent to which a test measures what it is supposed to measure [3]. In the case of UX, this means that the instrument or metric should measure the concept of UX as it is understood in the research literature, and not, for example, only usability. One good way to establish construct validity is through factor analysis [3].

Construct reliability refers to the consistency of a test or measure [4]. Put differently, it describes how reproducible the results of an instrument or metric are. A good way to establish construct reliability is through studies that assess the test-retest reliability of the instrument or metric as well as its internal consistency, for example, in terms of Cronbach's alpha [4].
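To make these two checks concrete, here is a minimal Python sketch. Cronbach's alpha is computed as α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜ), where k is the number of items, σ²ᵢ is the variance of item i, and σ²ₜ is the variance of the respondents' total scores; test-retest reliability is approximated as the Pearson correlation between total scores from two sessions. The response data and function names are hypothetical illustrations, not taken from any particular instrument.

```python
from math import sqrt
from statistics import mean, variance  # variance() is the sample variance (n - 1)


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Internal consistency of k items; rows are respondents, columns are items."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    item_columns = list(zip(*responses))
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_scores = [sum(row) for row in responses]
    return k / (k - 1) * (1 - sum_item_variances / variance(total_scores))


def pearson_r(x: list[float], y: list[float]) -> float:
    """Test-retest reliability as the correlation of scores from two sessions."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


# Hypothetical data: five respondents answering three 5-point Likert items.
session_1 = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3], [1, 2, 2]]
# Hypothetical total scores of the same five respondents in a second session.
session_2_totals = [12, 8, 14, 9, 6]

alpha = cronbach_alpha(session_1)
retest_r = pearson_r([sum(row) for row in session_1], session_2_totals)
print(f"alpha = {alpha:.3f}, test-retest r = {retest_r:.3f}")
```

With these made-up responses, alpha comes out at roughly 0.96 and the two sessions correlate strongly. In practice, both values would be computed on a much larger sample, and for each subscale of an instrument separately.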

In addition to that, the Joint Research Centre of the European Commission (JRC) provides a “Handbook on Constructing Composite Indicators” [5], which summarizes the proper process in terms of a 10-step checklist. We build on all of the above for our following list of seven heuristics for identifying proper UX instruments and metrics.

Heuristic 1: Is there a paper about it? If there is no paper about the instrument and/or metric in question, there’s barely a chance you’ll be able to answer any of the following questions with yes. So, this should be the first thing to look for. A peer-reviewed paper published in a scientific journal or conference would be the best case, but there should be at the very least some kind of white paper available.

Heuristic 2: Is there a sound theoretical basis? In the case of UX, this means, does the provider of the instrument and/or metric clearly explain their understanding of UX and, therefore, what their construct actually measures? The JRC states: “What is badly defined is likely to be badly measured” [5].

Heuristic 3: Is the choice of items explained in detail? Why were these specific variables of the instrument chosen, and not others? And how do they relate to the theoretical framework, that is, the understanding of UX? The JRC states: “The strengths and weaknesses of composite indicators largely derive from the quality of the underlying variables” [5].

Heuristic 4: Is an evaluation of construct validity reported? This could be reported in terms of, for example, a confirmatory factor analysis [3]. If not, you can’t be sure whether the instrument or metric actually measures what it’s supposed to measure.

Heuristic 5: Is an evaluation of construct reliability reported? This could be reported in terms of, for example, Cronbach’s alpha [4]. If not, you can’t be sure whether the measurements you obtain are proper and reproducible approximations of the actual UX you want to measure.

Heuristic 6: Is the data that’s combined to form the metric properly normalized? This is necessary if the items in an instrument have different units of measurement. The JRC states: “Avoid adding up apples and oranges” [5].
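A minimal sketch of why normalization matters, assuming three hypothetical items measured in different units: a 1–7 rating, a task success rate between 0 and 1, and a task time in seconds (where lower is better). It uses min-max normalization onto [0, 1] with each scale's theoretical bounds; this is only one of several possible normalization methods, and z-scores are another common choice.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A hypothetical measured variable with its own unit and range."""
    name: str
    value: float
    lower: float  # theoretical minimum of the scale
    upper: float  # theoretical maximum of the scale
    higher_is_better: bool = True


def min_max_normalize(item: Item) -> float:
    """Map a raw value onto [0, 1] so that items with different units become comparable."""
    if item.upper <= item.lower:
        raise ValueError(f"invalid range for {item.name}")
    scaled = (item.value - item.lower) / (item.upper - item.lower)
    scaled = min(max(scaled, 0.0), 1.0)  # clip values outside the stated range
    return scaled if item.higher_is_better else 1.0 - scaled


items = [
    Item("satisfaction rating", 5.5, lower=1, upper=7),
    Item("task success rate", 0.8, lower=0, upper=1),
    Item("task time in seconds", 90, lower=30, upper=300, higher_is_better=False),
]

naive_sum = sum(i.value for i in items)  # apples plus oranges: dominated by the seconds
normalized = [min_max_normalize(i) for i in items]
print(round(naive_sum, 2), [round(n, 3) for n in normalized])
```

Summing the raw values yields 96.3, a number driven almost entirely by the task time; after normalization, each item contributes on the same 0-to-1 scale (0.75, 0.8, and about 0.78).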

Heuristic 7: Is the weighting of the different factors that form the metric explained? Factors should be weighted according to their importance. “Combining variables with a high degree of correlation” (double counting) should be avoided [5].
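The double-counting check and the weighting step can be sketched as follows, assuming factor scores that have already been normalized. The correlation threshold of 0.9, the factor names, and the weights are all hypothetical; in a real instrument, the weights should follow from the theoretical framework rather than be picked ad hoc.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def pearson_r(x: list[float], y: list[float]) -> float:
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def flag_double_counting(factors: dict[str, list[float]], threshold: float = 0.9) -> list[tuple[str, str, float]]:
    """Return factor pairs whose scores correlate so strongly that combining them would double count."""
    flagged = []
    for a, b in combinations(factors, 2):
        r = pearson_r(factors[a], factors[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 3)))
    return flagged


def weighted_composite(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of normalized factor scores; weights express importance and must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weight * scores[name] for name, weight in weights.items())


# Hypothetical normalized factor scores of five respondents.
factors = {
    "efficiency": [0.2, 0.4, 0.6, 0.8, 1.0],
    "speed": [0.25, 0.45, 0.6, 0.85, 0.95],
    "novelty": [0.9, 0.3, 0.7, 0.2, 0.5],
}
flags = flag_double_counting(factors)

# Drop the redundant factor, then combine one respondent's scores with hypothetical weights.
composite = weighted_composite({"efficiency": 0.8, "novelty": 0.5}, {"efficiency": 0.6, "novelty": 0.4})
print(flags, round(composite, 2))
```

Here, the hypothetical "efficiency" and "speed" factors correlate at about 0.99 and are flagged, which suggests dropping or merging one of them before weighting.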

In the following, the application of these heuristics will be demonstrated through two very brief case studies.

Case Study 1: UEQ

The User Experience Questionnaire (UEQ) is a popular UX instrument developed at SAP AG.

- H1: There is a peer-reviewed research paper about UEQ, which is available at [6]. ✓

- H2: The paper clearly defines the authors’ understanding of UX, and they elaborate on the theoretical background. ✓

- H3: The paper explains the selection of the item pool and how it relates to the theoretical background. ✓

- H4: The paper describes, in detail, two studies in which the validity of UEQ was investigated. ✓

- H5: The paper reports Cronbach’s alpha for all subscales of the instrument. ✓

- H6: Not applicable, since UEQ doesn’t explicitly define a composite indicator. However, a composite indicator can be constructed from the instrument.

- H7: See H6.

Case Study 2: QX score “for measuring user experience”

This metric was developed by SaaS provider UserZoom and is now provided by UserTesting. It is a composite of two parts: 1) the widely used SUPR-Q instrument and 2) the individual task success rates from the user study where the metric was measured, in a 50/50 proportion.

- H1: There is no research paper, but at least a blog post explaining the instrument and metric. ✓

- H2: No clear definition of UX is given. The theoretical basis for the metric is the assumption that all existing UX metrics use either only behavioral or only attitudinal data. No well-founded explanation is given as to why this is considered problematic. The implicit reasoning is that only by mixing behavioral and attitudinal data can we properly measure UX, which is factually incorrect (cf. [2]). ❌

- H3: The metric mixes attitudinal (SUPR-Q) and behavioral (task success) items, but no well-founded reasoning is given as to why only task success rate was chosen, or why this would improve SUPR-Q, which is already a valid and reliable UX instrument in itself. ❌

- H4: No evaluation of construct validity is reported. ❌

- H5: No evaluation of construct reliability is reported. ❌

- H6: No approach to data normalization is reported; the metric seemingly adds up apples and oranges. ❌

- H7: There is no reasoning given for the weighting of the attitudinal and behavioral items. ❌

In conclusion, the seven heuristics provided in this article serve as a useful guide for identifying proper UX instruments and metrics. By considering construct validity and reliability, and by following the JRC's 10-step checklist, practitioners and researchers alike can make informed decisions when choosing a UX instrument or metric. Not all instruments or metrics will pass all of these heuristics, but the more of them that are met, the more confident one can be that the chosen instrument or metric properly measures UX. If in doubt, choose the one that checks more boxes. Some heuristics, like H1, are not strictly necessary, and H6 and H7 only apply to composite indicators. There may also be valid instruments or metrics that fail some of these heuristics, and invalid ones that pass some. The heuristics' goal is to provide a robust and quick framework for evaluating UX instruments and metrics; ultimately, however, the best approach will depend on the specific research or design project.
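The "more boxes" rule can be expressed as a simple tally that counts only the applicable heuristics, so that H6 and H7 are skipped for instruments without a composite indicator. The sketch below encodes the results of the two case studies from this article; the class and field names are hypothetical, and a mechanical tally like this cannot replace the judgment described above.

```python
from dataclasses import dataclass, field

HEURISTICS = ["H1", "H2", "H3", "H4", "H5", "H6", "H7"]
COMPOSITE_ONLY = {"H6", "H7"}  # normalization and weighting only apply to composite indicators


@dataclass
class Assessment:
    name: str
    is_composite: bool
    passed: set[str] = field(default_factory=set)

    def applicable(self) -> list[str]:
        """Heuristics that apply to this instrument or metric."""
        return [h for h in HEURISTICS if self.is_composite or h not in COMPOSITE_ONLY]

    def score(self) -> tuple[int, int]:
        """(number of applicable heuristics passed, number of applicable heuristics)."""
        applicable = self.applicable()
        return sum(h in self.passed for h in applicable), len(applicable)

    def pass_rate(self) -> float:
        passed, total = self.score()
        return passed / total


# Results of the two case studies above.
ueq = Assessment("UEQ", is_composite=False, passed={"H1", "H2", "H3", "H4", "H5"})
qx = Assessment("QX score", is_composite=True, passed={"H1"})

best = max([ueq, qx], key=Assessment.pass_rate)
print(ueq.score(), qx.score(), best.name)  # (5, 5) (1, 7) UEQ
```

UEQ passes all five of its applicable heuristics, whereas the QX score passes one of seven.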

Endnotes

1. Speicher, M. Conversion rate & average order value are not UX metrics. UX Collective. Jan. 2022; https://uxdesign.cc/conversion...

2. Speicher, M. So, How Can We Measure UX? Interactions 30, 1 (2023), 6–7; https://doi.org/10.1145/357096...

3. Kline, R.B. Principles and Practice of Structural Equation Modeling. Guilford Publications, 2015.

4. Cronbach, L.J. and Meehl, P.E. Construct validity in psychological tests. Psychological Bulletin 52, 4 (1955), 281.