Sonic Dancer: A Low-Cost, Sound-Based Device to Explore Shared Movement and Dance Through Generative Live Soundscapes

Issue: XXXI.1 January - February 2024, Page: 6

Authors:

Swen Gaudl, Silvia Carderelli-Gronau

How can we feel the presence of and connection to others in a shared space if they can join remotely but cannot be physically present? For lectures, meetings, and talking with friends, video and phone conferencing technologies exist. However, what happens if you want to be untethered from your screen or if your interaction requires physical movement, such as dance practice or understanding where people are in a physical space? With Sonic Dancer, we are exploring how meaningful interaction can be achieved without using complex vision-based approaches. We started the project with the goal of building a low-cost, easy-to-replicate device that lets people engage remotely in synchronized movement and "feel" where somebody is. Vision-based approaches offer precise tracking but rely on more expensive technology and often complex setups, making them less approachable for less tech-savvy people.

For our project, "feeling" where somebody is in relation to you and how they move through space provides a novel quality, generating not only presence but also connectedness. Thus, stripping any technology down to what is really required and can be replicated easily seemed like an exciting approach.

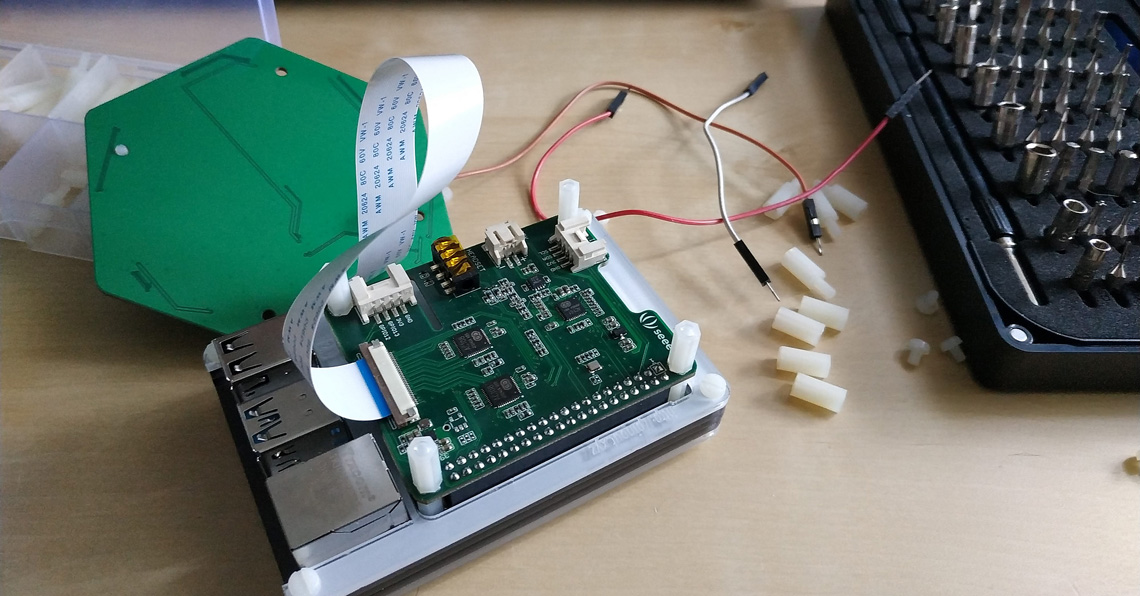

With Sonic Dancer, we wanted to identify the location of people in a space and how they move through that space in relation to a beacon that allows the creation of a virtual anchor or origin. Starting with a Raspberry Pi, a microphone array (Figure 1), and machine-learning techniques to locate sound sources and movement traces, we built a first prototype. The device identifies approximately five to ten individual movers by recording the sound around the device, processing it, and mapping it to an onion-like object, which we split into eight equal slices, where each sliced-onion layer represents a tonal subspace. Initially, we rendered the potential location of the "other" on a projection, offering an understanding of where people are in relation to the device. This allowed two movers to engage with each other's movement in a "virtual" 3D space. Seeing the other mover provided value, but both movers kept focusing on the projection rather than on their own movement. To move away from a screen, we involved a sound engineer to interpret the located movement, creating a sound representation for each mover that was presented on a speaker array (Figure 2) around them. We added a 3D-printed shell (Figure 3) around the tech so people would not hurt themselves when stepping on the device. It was magical seeing the movers engage more openly with the space, no longer focused on a screen.
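The slice-and-layer mapping described above can be illustrated with a small sketch. This is not the authors' implementation: it assumes a mover's position relative to the device has already been estimated, flattens the onion to two dimensions, and uses a hypothetical layer count and radius (`NUM_LAYERS`, `MAX_RADIUS_M`), none of which are stated in the article. Only the eight equal slices come from the text.

```python
import math

NUM_SLICES = 8       # from the article: the onion is split into eight equal slices
NUM_LAYERS = 3       # assumption: the number of layers is not stated in the article
MAX_RADIUS_M = 2.0   # assumption: outer edge of the outermost layer, in meters


def locate_in_onion(x: float, y: float) -> tuple[int, int]:
    """Map a position relative to the device (meters) to (slice, layer) indices."""
    # Bearing of the mover around the device, normalized to [0, 360).
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    # Each slice spans 360 / NUM_SLICES degrees; the final modulo guards
    # against a bearing that rounds up to exactly 360.0.
    slice_index = int(azimuth // (360.0 / NUM_SLICES)) % NUM_SLICES
    # Layers are concentric rings of equal width; positions beyond the
    # outermost ring are clamped into the last layer.
    distance = math.hypot(x, y)
    layer_width = MAX_RADIUS_M / NUM_LAYERS
    layer_index = min(int(distance // layer_width), NUM_LAYERS - 1)
    return slice_index, layer_index
```

Under these assumptions, each `(slice, layer)` pair could then select which tonal subspace a mover's sound is drawn from.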

Figure 1. The Raspberry Pi 4 microcomputer functions as the core of the device. The first build had minimal physical user protection and was used for exploring different input devices.

Figure 2. The Sonic Dancer device is positioned in the center of a 2-meter circular speaker arrangement with a projector underneath it.

However, this arrangement proved too complex and expensive to be feasible. Stripping the tech back again, we switched to Bluetooth speakers, a visual LED compass (Figure 4) on the device that gives movers a visual reference while they get to know the device (Figure 5), and a computational approach that maps the movement to explorable sound using Pure Data (https://puredata.info/). This means users only need a single device, Bluetooth headphones or a speaker, and a USB-C power cord. The design of the shell had to be iterated, as the Pi was overheating during movement practice. We also switched to a semitransparent PET material (Figure 6), which does not degrade as quickly when exposed to sunlight and is able to withstand being stepped on. As a bonus, it enhanced the visual response of the LEDs (Figure 4). Thanks to the focus on low-cost components and low power consumption, the entire device can be used outdoors with a phone battery pack for an hour, so movers are able to explore shared practice in nature without fear of breaking something expensive.
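As a rough illustration of how an LED compass might point toward a located mover, the sketch below picks the LED nearest to a given bearing. The LED count (`NUM_LEDS`) and the function name `compass_led` are hypothetical; the article does not describe how the compass is driven.

```python
NUM_LEDS = 12  # assumption: the article does not state how many LEDs the compass has


def compass_led(azimuth_deg: float, num_leds: int = NUM_LEDS) -> int:
    """Return the index of the LED closest to the given bearing in degrees."""
    # Angular spacing between neighboring LEDs on the ring.
    step = 360.0 / num_leds
    # Normalize the bearing to [0, 360), round to the nearest LED, and wrap
    # so that bearings just below 360 degrees map back to LED 0.
    return int(round((azimuth_deg % 360.0) / step)) % num_leds
```

With twelve LEDs spaced 30 degrees apart, a mover at a bearing of 90 degrees would light LED 3 under this scheme.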

Figure 4. The current design is still in dev mode with exposed ports for easier debugging through custom colorful plugs. They can be closed to reduce chances of snagging.

Figure 5. Experimenting with sounds and different-sized spaces and fine-tuning the sensitivity of the microphones is still ongoing work. Here we experiment in a green room with heavy curtains.

Swen Gaudl is a senior lecturer of interaction design in the Department of Applied IT at the University of Gothenburg in Sweden. He researches interaction design, artificial intelligence, behavior modeling, evolutionary computation, digital games, social robotics, and low-cost robotic design by designing and building digital and physical artifacts.

Silvia Carderelli-Gronau is a dance artist, researcher, and dance movement therapist interested in somatics, group improvisation, and embodiment. She investigates moving, ensemble improvisation, and multimodal approaches to being with self, others, and the environment. She pursues a dialogue between "pure" physical experiences and experiences with technologies.

Copyright held by authors

The Digital Library is published by the Association for Computing Machinery. Copyright © 2024 ACM, Inc.