MaD

Issue: XXII.2 March + April 2015
Page: 6


Authors:

Jules Françoise, Norbert Schnell, Riccardo Borghesi, Frédéric Bevilacqua

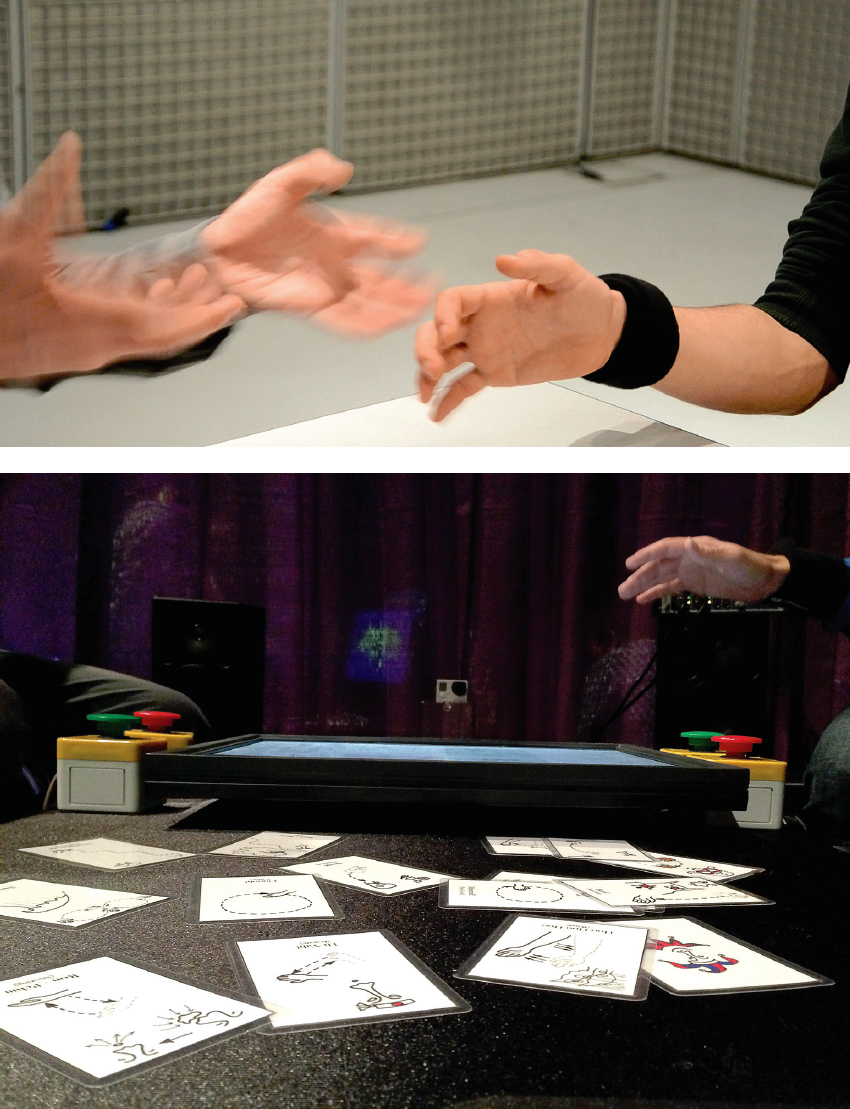

MaD allows for simple and intuitive design of continuous sonic gestural interaction. When movement and sound examples are jointly recorded, the system automatically learns the motion-sound mapping. Our applications focus on using vocal sounds—recorded while performing actions—as the primary material for interaction design. The system integrates probabilistic models with hybrid sound synthesis. Importantly, the system operates independently of motion-sensing devices, and can be used with different sensors such as cameras, contact microphones, and inertial measurement units. Applications include not only performing arts and gaming but also medical applications such as auditory-aided rehabilitation.
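The idea of learning a mapping from jointly recorded examples can be illustrated with a deliberately small sketch. It is not the MaD implementation: it swaps in a single joint Gaussian over one motion feature and one sound parameter, which is far simpler than the probabilistic models the system integrates. The function names, the normalized motion feature, and the sound parameter in Hz are all assumptions made for illustration.

```python
from statistics import fmean


def fit_joint_gaussian(motion, sound):
    """Estimate a joint Gaussian from paired (motion, sound) frames.

    Assumption: one scalar motion feature and one scalar sound parameter
    per frame, recorded together during the demonstration.
    """
    if len(motion) != len(sound) or len(motion) < 2:
        raise ValueError("need at least two paired frames")
    n = len(motion)
    mu_m = fmean(motion)
    mu_s = fmean(sound)
    # Unbiased sample variance of motion and covariance of motion with sound.
    var_m = sum((m - mu_m) ** 2 for m in motion) / (n - 1)
    cov_ms = sum((m - mu_m) * (s - mu_s) for m, s in zip(motion, sound)) / (n - 1)
    if var_m == 0:
        raise ValueError("motion examples must vary")
    return {"mu_m": mu_m, "mu_s": mu_s, "var_m": var_m, "cov_ms": cov_ms}


def predict_sound(model, m):
    """Conditional mean E[sound | motion = m] under the joint Gaussian."""
    gain = model["cov_ms"] / model["var_m"]
    return model["mu_s"] + gain * (m - model["mu_m"])


# Hypothetical demonstration: a normalized motion feature recorded alongside
# a sound parameter in Hz (values invented for this sketch).
demo_motion = [0.0, 0.25, 0.5, 0.75, 1.0]
demo_sound = [200.0 + 400.0 * m for m in demo_motion]

model = fit_joint_gaussian(demo_motion, demo_sound)
estimate = predict_sound(model, 0.6)  # sound parameter for a new motion frame
```

At performance time, each incoming motion frame would be passed to `predict_sound` to drive a synthesis parameter continuously. A real system would need multidimensional features and a model that captures temporal structure, which this sketch leaves out.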

http://ismm.ircam.fr/siggraph2014-mad/

SIGGRAPH'14 - MaD: Mapping by Demonstration for Continuous Sonification from Jules Françoise on Vimeo.

Françoise, J., Schnell, N., and Bevilacqua, F. A multimodal probabilistic model for gesture-based control of sound synthesis. Proc. of the 21st ACM International Conference on Multimedia. ACM, New York, 2013, 705–708. DOI:10.1145/2502081.2502184

Françoise, J., Schnell, N., Borghesi, R., and Bevilacqua, F. Probabilistic models for designing motion and sound relationships. Proc. of the 2014 International Conference on New Interfaces for Musical Expression. 2014, 287–292.

Jules Françoise, IRCAM.CNRS.UPMC


Norbert Schnell, IRCAM.CNRS.UPMC


Riccardo Borghesi, IRCAM.CNRS.UPMC


Frédéric Bevilacqua, IRCAM.CNRS.UPMC
