Authors: Arathi Sethumadhavan, Esra Bakkalbasioglu

Posted: Wed, June 30, 2021 - 12:46:38

The following is a review of recent publications on the issue of AI and surveillance and does not reflect Microsoft's opinion on the topic.

The term surveillance is derived from sur, which means from above, and veillance, which means to watch. Theoretical approaches to surveillance can be traced back to the 18th century and Bentham’s prison panopticon. The panopticon's premise involved a guard in a central tower watching over the inmates. The “omnipresence” of the guard was expected to deter the prisoners from transgression and encourage self-discipline. Today, the declining costs of surveillance hardware and software, coupled with the increased data storage capacity offered by cloud computing, have lowered the financial and practical barriers to surveilling large populations. As of 2019, there were an estimated 770 million public surveillance cameras around the world, and the number is expected to reach 1 billion in 2021.

During the Covid-19 pandemic, surveillance has also increased massively around the world. For example, technologies are used to let people check whether they have come into contact with Covid-19 patients, to map people's movements, and to track whether quarantined individuals have left their homes. While such surveillance technologies can help combat the pandemic, they also introduce the risk of normalizing surveillance.

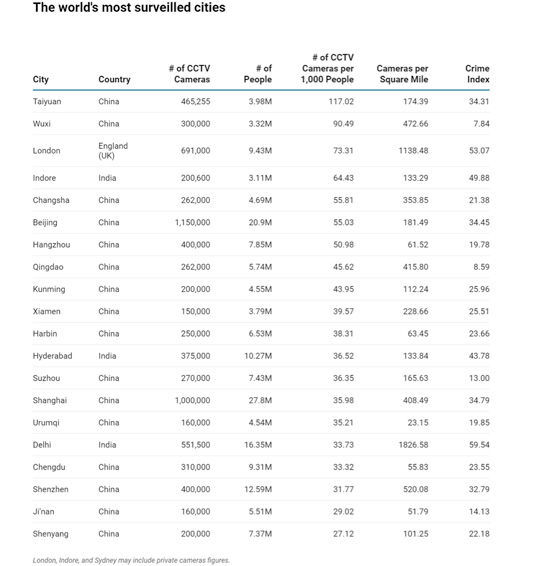

China is home to the top surveilled cities in the world

Of the 20 most surveilled cities in the world (based on the number of cameras per 1,000 people), 16 are in China. Outside of China, London and the Indian cities of Indore, Hyderabad, and Delhi are estimated to be the most surveilled cities in the world.

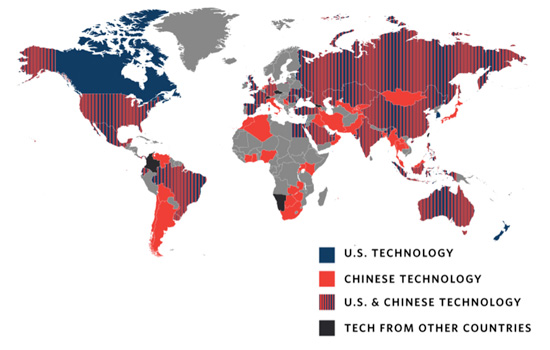

AI-based surveillance technologies are being deployed globally at an unfathomable rate

Surveillance technologies are being deployed at an extremely fast rate globally. As of 2019, at least 75 countries were using AI technologies such as smart policing systems, facial recognition, and smart city platforms for surveillance purposes. Every nation has a unique approach to surveillance, shaped by its technological landscape and economic power as well as its social, legal, and political systems. As of 2019, China had installed more than 200 million facial recognition-powered cameras for purposes ranging from identifying thefts, finding fugitives, and spotting jaywalkers to designing targeted advertisements and detecting inappropriate behaviors in classrooms. In India, the Internet Freedom Foundation has estimated that there are 42 ongoing facial recognition projects, with at least 19 systems expected to be used by state-level police departments and the National Crime Records Bureau for surveilling Indian citizens. Earlier this year, the Delhi police used facial recognition technology to track down suspects allegedly involved in violent clashes during the farmers’ tractor march in the nation’s capital.

AI Surveillance Technology Origin. Source: Carnegie Endowment For International Peace

Growing deployment in various sectors criticized by civil liberties advocacy groups

Their growing reach and affordability have led to increased use of AI-based surveillance technologies in law enforcement, retail stores, schools, and corporate workplaces. Several of these applications have drawn criticism. For example, several police departments in the U.S. are believed to have misused facial recognition systems by relying on erroneous inputs such as celebrity photos, computer-generated faces, or artist sketches. The stakes are too high to rely on unreliable or wrong inputs. Similarly, this year, news that the Lucknow police in Northern India wanted to use AI-enabled cameras that can read expressions of distress on women's faces when they are subjected to harassment was met with backlash, with civil rights advocates noting that it could violate women’s privacy and exacerbate the situation. Further, the scientific basis for using AI to read “distress” was deemed unsound. In the education sector, a few schools in the U.S. are relying on facial recognition systems to identify suspended students and staff members as well as other threats. Civil liberties groups argue that in these scenarios there is little evidence that use of the technology leads to the desired outcome (e.g., increased safety, increased productivity). Critics also contend that the investigation of petty crimes does not justify the use of surveillance technologies, including the creation of a massive facial recognition database.

Surveillance technologies raise public concerns

Widespread use of new surveillance technologies has raised valid privacy concerns among the general public in several nations. For example, a survey conducted by the Pew Research Center revealed that more than 80 percent of Americans believe that they have very little or no control over the personal data collected about them by companies (81 percent) and government agencies (84 percent). A 2019 survey conducted in Britain showed that the public is willing to accept facial recognition technology when there are clear benefits but wants the government to impose restrictions on its use. Further, the desire to opt out of the technology was higher among individuals belonging to ethnic minority groups, who were concerned about unethical use of the technology. These findings demonstrate the need to involve the public early and often in the design of such technologies.

Need for legal frameworks and industry participation to address public concerns

Recently, the European Commission introduced a proposal for a legal framework to regulate the use of AI technologies. As part of this, it has proposed banning the use of real-time remote biometric identification systems by law enforcement, except for a limited number of uses such as cases involving missing children, terrorist attacks, and serious crimes. While the U.S. does not have federal laws regulating AI surveillance, some cities have restricted the use of such technologies by law enforcement. For example, San Francisco became the first U.S. city to ban the use of facial recognition by local agencies, followed by Somerville, Oakland, Berkeley, and Brookline. A number of companies, including Microsoft, have called for the creation of new rules, including a federal law in the U.S. grounded in human rights, to ensure the responsible use of technologies like facial recognition.

