Authors: Chameera De Silva, Thilina Halloluwa

Posted: Tue, May 09, 2023 - 3:06:00

Have you ever played a game against a computer? Have you ever wondered how computers win complex games against highly experienced players, such as when IBM's Deep Blue defeated world chess champion Garry Kasparov in 1997?

The fear of AI becoming the biggest threat to humankind, replacing the human workforce on a large scale, is a real concern for AI practitioners and businesses. But there have been cases where a smart AI system supervised by humans has produced remarkable achievements. Technology has developed magnificently in the past decade, and the widespread adoption of smart systems powered by AI points to a much smarter and more tech-dependent world. New-age AI systems will become more and more learning-based. The massive data generated by the Internet will be used to train these models and systems to improve their intelligence in specifically focused areas.

The current focus of AI developers is to make the algorithms better and optimize the performance of the AI models as much as possible. We’re heading toward Web 3.0; its adoption will be much faster than that of Web 1.0 and Web 2.0 and driven by the adoption of AI.

The super-fast development and integration of AI tools into the products and services we use is accelerating the pace of our lives. Alongside this development is the fear that AI will exceed human cognitive intelligence, leaving us struggling to maintain control over it.

This fear of AI replacing humankind is the motivation for human-centered artificial intelligence (HCAI), where at one end we have AI and at the other end human beings supervising the actions and results of AI. The development of this technology has happened intuitively and on multiple levels.

In the early days of the Web, Web 1.0, we could only read the content online. The public had no control over what they wanted to read or consume, and we could not search for things; it was just one-way communication. This motivated the creation of Web 2.0, which gave us applications like Facebook, YouTube, and Google. This made communication on the Web two-way. We were now able to decide what content we wanted to see. We could not only share our views as comments but also like or dislike posts. Now, as we interact with technology more and more, we are finding more ways to incorporate it into our lives, and one way of doing so is by developing AI applications.

Artificial Intelligence

Humans are constantly working on creating machine intelligence similar to human intelligence; this is called artificial intelligence. AI is one way we are using technology to create applications and systems that are automated and can work for us without any supervision. Self-driving cars, lie detector machines, and robots that can do surgery are just some examples of automation made possible by artificial intelligence. Current research is targeting advances in many sectors, including finance, education, and transportation.

These advances mean incorporating AI into our work processes at higher levels. This integration of AI into human life is a matter of great concern today, often creating fear of AI and pitting technology against humankind.

What Is the Fear?

Machines are no doubt very fast and accurate in calculating numbers and analyzing large numbers of scenarios and methods within minutes. This speed and accuracy is very helpful to humans, but relying on machines completely just because of their speed and accuracy is a bad idea. Machine intelligence is smarter than human intelligence in the areas in which the machine is trained—but it cannot be trusted blindly.

Learning-based AI systems have been shown to be unsafe in many ways, with both ethical and safety implications. The decisions and results provided by these systems are not guaranteed to be fair and have also been shown to be not perfectly explainable. That is, the current models are black-box models for us humans, as they do not provide reasons for the results they give.

Real-world scenarios cannot work on ambiguity and ethically unsafe standards.

AI is making machines intelligent, but we cannot guarantee the safety of these systems in any specific way unless they are extremely constrained.

What Are the Challenges?

There are several challenges with AI machines today. The results given by AI systems are often trusted simply because they are provided by computers. But the explanation of these results becomes very important as we scale up the use cases and implementation of these smart systems. The current solution to this challenge is explainable artificial intelligence. Explainable AI adds a level of transparency to the decisions and processing done by AI systems. But as we incorporate AI systems in sectors like education, medicine, and finance, explainability is not enough.

AI in the education sector

The systems involved in the education sector will be assigned to grade students’ papers, mark their attendance, and look for any signs of cheating during examinations. The results given by the systems in this area directly affect students’ academic records; therefore, it is crucial to ensure that the decisions are fair and grounded in the actual rules of the real world. To achieve this level of trust with machines, human supervision is necessary.

AI in the medical sector

Likewise, the involvement of AI systems in the medical sector is opening new doors of advancement in medicine. Robots are performing surgeries, as well as recommending medications to patients on the basis of their symptoms.

The recommendations of these systems are highly likely to directly impact patients’ health and lives; therefore, it is extremely important that the recommendations are 100 percent correct. But here, due to the subjective nature of patients’ symptoms, the chance of a machine making a mistake is very high. For example, there could be a case where the patient is feeling chest pain due to low blood pressure, but the machine detects it as a heart attack. These kinds of mistakes can cost lives, and thus there must be zero tolerance for them.

The challenge

Because of the lower cognitive and emotional intelligence of machines, it is necessary to incorporate smart systems alongside humans and not in place of humans. The desired advancement can be achieved only when humans and machines work together.

Human-Centered AI

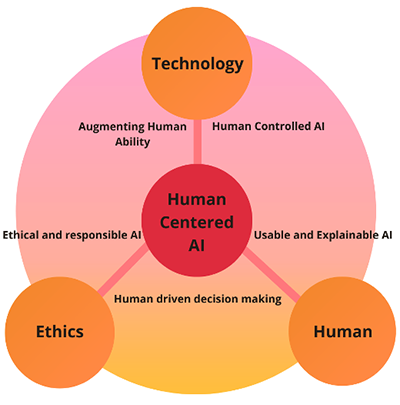

Human-centered AI (HCAI) is AI with human supervision. There are three main pillars of HCAI: humans, ethics, and technology. Human-centered AI is the solution to the fear of AI exceeding human intelligence and thus becoming a threat to humankind.

HCAI will ensure that AI systems are supervised by humans and that the processing and decisions of smart systems are all under the control of a human (Figure 1).

Figure 1. Human-centered AI combines humans, ethics, and technology.

But what exactly is human-centered AI? The basic idea involves the deep integration of humans into the data-annotation process and real-world operation of the system in both the training and the testing phases. HCAI will combine human-driven decision making with AI-powered smart systems, thereby making them smart, fast, and trustable. Ethical and responsible AI systems will become a reality by incorporating AI alongside humans. Augmenting AI systems with human abilities will also bring us usable and explainable AI. This is essentially human-controlled AI, combining the modern power of artificial intelligence with human intelligence.

HCAI will address the challenges of the lower emotional intelligence of machines and the lower speed and accuracy of humans together.

How is HCAI possible?

Current AI systems are learning-based: they are trained on data and then tested on similar datasets to check their accuracy. These datasets are created by humans, and a large amount of time, energy, and money is invested in performing detailed annotations to create a dataset suitable for training an AI model.

The aim of HCAI is to enable an annotation process driven by the machines, in the form of queries to humans. The machines will decide which data needs to be annotated by humans, and only that subset of the data will be given to the humans for annotation. This is one way to create human-centered AI.
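One simple way to picture machine-generated annotation queries is uncertainty sampling, a common active-learning heuristic. The sketch below is only an illustration, not the method of any particular HCAI system: the item ids, the probabilities, and the `select_for_annotation` helper are all made up for the example. The model's predicted class probabilities are ranked by entropy, and only the most uncertain items are handed to human annotators.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a predicted class distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` items the model is least sure about.

    `predictions` maps an item id to the model's class probabilities.
    """
    ranked = sorted(
        predictions,
        key=lambda item: entropy(predictions[item]),
        reverse=True,  # highest entropy (most uncertain) first
    )
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled items (illustrative numbers).
predictions = {
    "img_001": [0.98, 0.02],
    "img_002": [0.55, 0.45],
    "img_003": [0.90, 0.10],
    "img_004": [0.50, 0.50],
}

# Only these two items would be sent to humans as annotation queries.
queries = select_for_annotation(predictions, budget=2)
print(queries)  # ['img_004', 'img_002']
```

The remaining items keep their machine-assigned labels, so the human effort goes where the model is least certain.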

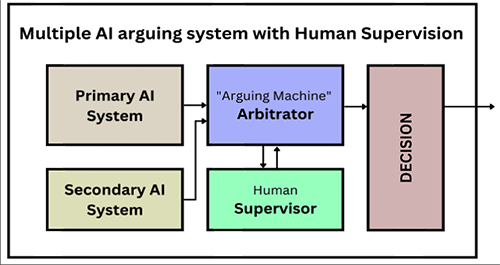

With regard to real-world applications, current systems just deliver the results to us and we receive them. With HCAI, the results given by the AI system will have a degree of uncertainty associated with them from the AI system; this result will then be sent to the human supervisor. When the results get the thumbs up from the human supervisor, then and only then are they sent as the final result (Figure 2).

Figure 2. Multiple AI systems arguing with human supervision.

This is the basic, ground-level idea of HCAI. It can get much deeper and can exist in many formats.
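The approval step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Prediction` class, the `supervised_result` function, and the 0.2 cutoff used by the stand-in supervisor are hypothetical names and values chosen for the example, and in a real system the `approve` callback would be a person reviewing the result rather than a fixed rule.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Prediction:
    label: str
    uncertainty: float  # 0.0 = fully confident, 1.0 = no confidence


def supervised_result(
    prediction: Prediction,
    approve: Callable[[Prediction], bool],
) -> Optional[str]:
    """Release the AI result only if the human supervisor approves it."""
    if approve(prediction):
        return prediction.label
    return None  # rejected: nothing is released as a final result


# Hypothetical stand-in for a human supervisor's judgment.
def cautious_supervisor(prediction: Prediction) -> bool:
    return prediction.uncertainty <= 0.2


print(supervised_result(Prediction("benign", 0.05), cautious_supervisor))  # benign
print(supervised_result(Prediction("benign", 0.60), cautious_supervisor))  # None
```

The point of the design is that the uncertainty travels with the result, so the supervisor sees how sure the system was before giving the thumbs up.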

Algorithms in HCAI

The algorithms of current AI systems are created with the purpose of training machine-learning models. The algorithms in HCAI will be designed in such a way that they give the AI system an idea of the context of human intelligence, both in the moment and over time. Smart systems will not only be trained mechanically on the annotated data but will also be trained on the human understanding of the concept. This will make AI systems even smarter and reduce the risk of unwanted out-of-the-box results.

Human-computer interaction

To create the algorithms that teach the computer the context of human understanding, we need human-computer interaction. To make the interactions with humans successful and fruitful for HCAI purposes, they must be continuous and collaborative and must provide a rich, meaningful experience.

We currently have autonomous vehicles that have driven more than 100 billion miles on the road in autopilot mode, some of them in semi-autopilot mode. These cars and the smart systems installed in them aim to have a rich and fulfilling human-computer interaction experience. The cars have a bunch of super-cool devices that monitor and record the multiple modes and emotions of the driver, and they try to give us a better interactive experience. But we are seeking a collaborative, rich interactive experience that goes far beyond this.

The challenge for current smart car systems is that these cars are intended to be used by people from both the younger generation and the older one, who might have never used any AI-based system in their entire life. The real challenge is thus creating HCI that is capable of deeply exploring these aspects and providing a rich, interactive, and collaborative experience.

Safety and ethical awareness

Artificial intelligence or machine intelligence in general brings many concerns around safety and ethics.

Taking the example of self-driving cars, the passengers riding in a car that is in autopilot mode are prone to trust the system, as the system only provides the decisions it is making based on the situation. There is no information given regarding the level of uncertainty, nor any warnings from the system to the driver.

HCAI is a solution for this safety challenge. We can create a system with multiple AI models deciding on a single situation, and whenever a disagreement in the decisions is detected, human supervision is then sought. This is one way of involving human interaction in the decision-making and validation processes.
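Here is a minimal sketch of that idea, assuming three toy models and a placeholder human. The model functions, the `obstacle_distance_m` field, and the distance thresholds are all invented for illustration. Every model votes on the same situation; a unanimous vote is acted on directly, and any disagreement is handed to the human supervisor together with the votes.

```python
from typing import Callable, Sequence


def decide(
    models: Sequence[Callable[[dict], str]],
    situation: dict,
    ask_human: Callable[[dict, list], str],
) -> str:
    """Act on a unanimous decision; otherwise defer to the human supervisor."""
    votes = [model(situation) for model in models]
    if len(set(votes)) == 1:
        return votes[0]
    return ask_human(situation, votes)


# Hypothetical stand-in models with different braking thresholds.
def model_a(s):
    return "brake" if s["obstacle_distance_m"] < 30 else "continue"


def model_b(s):
    return "brake" if s["obstacle_distance_m"] < 20 else "continue"


def model_c(s):
    return "brake" if s["obstacle_distance_m"] < 25 else "continue"


escalations = []  # record of every disagreement shown to the human


def human_driver(situation, votes):
    escalations.append(votes)
    return "brake"  # placeholder for a real person's decision


models = [model_a, model_b, model_c]
print(decide(models, {"obstacle_distance_m": 10}, human_driver))  # brake
print(decide(models, {"obstacle_distance_m": 22}, human_driver))  # brake
print(escalations)  # [['brake', 'continue', 'brake']]
```

Only the second situation reaches the human, because it is the only one where the models disagree.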

The other challenge is the ethical awareness of the AI system. There are certain situations in the real world that cannot always be dealt with technically. The ethical aspects must be considered, and this is an area we need to work on to optimize our AI models.

Until AI models are smart enough to understand the ethical and emotional aspects of the real world, they need to be combined with human supervision in the form of HCAI. In addition, some of the current AI use cases, when enabled with stronger and safer HCAI, can make things much more secure for users.

HCAI Today

HCAI designers go beyond thinking of computers as our teammates, collaborators, or partners. They are more likely to develop technologies that dramatically increase human performance by taking advantage of the unique features of computers.

Recommender systems

A current system that is being implemented almost everywhere and is making things autonomous for humans is the recommender system. Widely used in advertising services, social media platforms, and search engines, these systems bring many benefits.

The consequences of mistakes by these systems are subtle, but malicious actors can manipulate them to influence buying habits, change election outcomes, or spread hateful messages and reshape people’s thoughts and attitudes. Thoughtful design that improves user control could increase consumer satisfaction and limit malicious use.

Other applications that enable automation include common user tasks such as spell-checking and search-query completion. These tasks, when carefully designed, preserve user control and avoid annoying disruptions, while offering useful assistance.

Consequential applications

Applications in medical, legal, or financial systems can bring both substantial benefits and harms. A well-documented case is the flawed Google Flu Trends, which was designed to predict flu outbreaks, enabling public health officials to assign resources more effectively.

A harmful attitude among programmers called “algorithmic hubris” suggests that some programmers have unreasonable expectations of their capacity to create foolproof autonomous systems.

The crashes in stock markets and currency exchanges caused by high-frequency trading algorithms triggered losses of billions of dollars in just a few minutes. But with adequate logging of trades, market managers can often repair the damage.

Life-critical systems

Moving on to the challenges of life-critical applications, we find physical devices like self-driving cars, pacemakers, and implantable defibrillators, as well as complex systems such as military, industrial, or aviation applications. These require rapid actions and may have irreversible consequences.

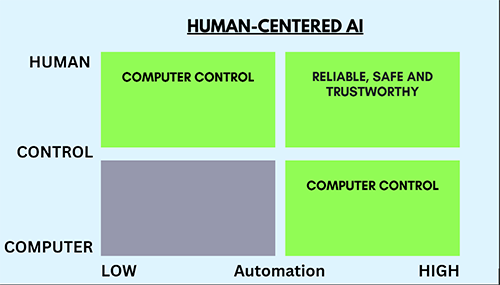

One-dimensional thinking suggests that designers must choose between human control and computer automation for these applications. The implicit message is that more automation means less control. Decoupling these two concepts leads to a two-dimensional HCAI framework.

Two-Dimensional HCAI

Two-dimensional HCAI suggests that achieving high levels of both human control and automation is possible, as shown in Figure 3.

Figure 3. Two-dimensional HCAI.

The goal is most often, but not always, to be in the upper-right quadrant. Most reliable, safe, and trustworthy (RST) systems are on the right side.

The message for designers from two-dimensional HCAI is that, for certain tasks, there is value in full computer control or full human mastery. However, the challenge is to develop an effective and proven design, supported by trusted social structures, reliable practices, and cultures of safety, so as to earn RST recognition.
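To make the two dimensions concrete, here is a small sketch that places a design on the grid. It rests on several assumptions that are not spelled out in the text: automation is taken as the horizontal axis and human control as the vertical axis, both are scored from 0 to 1, and the 0.5 cutoff and the `hcai_quadrant` name are arbitrary choices for illustration.

```python
def hcai_quadrant(human_control: float, automation: float,
                  threshold: float = 0.5) -> str:
    """Place a design on the two-dimensional HCAI grid.

    Both scores are hypothetical ratings in [0, 1]; the cutoff is an
    arbitrary illustrative choice, not part of the framework.
    """
    high_control = human_control >= threshold
    high_automation = automation >= threshold
    if high_control and high_automation:
        return "upper right: high human control and high automation"
    if high_automation:
        return "lower right: computer control"
    if high_control:
        return "upper left: human mastery"
    return "lower left: low human control and low automation"


print(hcai_quadrant(human_control=0.9, automation=0.9))
print(hcai_quadrant(human_control=0.1, automation=0.9))
```

Treating the two scores as independent inputs is the whole point: raising automation does not force the human-control score down.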

Conclusion

To conclude, artificial intelligence is not a threat to humankind if we bring it into our lives as a companion and learn to work alongside it. There are several ways that we can teach AI systems to accompany us humans in our tasks and make them understand us. We can also create a system with multiple AI models that argue over decisions and then seek human supervision on every disagreement.

The idea of two-dimensional human-centered artificial intelligence is making the vision of HCAI clearer for designers and developers and is redefining the challenge in a new way. Designers need to create trustable, safe, and reliable HCAI systems supported by cultures of safety so as to acquire technical recognition.

That said, nobody is perfect, neither artificial intelligence nor humans. But we can join hands and provide something enriching to each other. AI is the future, and the future is beautiful.

Additional Resources

1.

2.

3.

4. Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy, Ben Shneiderman, Draft February 23, 2020, version 29, University of Maryland, College Park, MD

