Authors: Maliheh Ghajargar, Jeffrey Bardzell, Allison Smith Renner, Peter Gall Krogh, Kristina Höök, Mikael Wiberg

Posted: Tue, February 15, 2022 - 4:11:15

Computational systems are becoming increasingly smart and automated. Artificial intelligence (AI) systems perceive things in the world, produce content, make decisions for and about us, and serve as emotional companions. From music recommendations to higher-stakes scenarios such as policy decisions, drone-based warfare, and automated driving directions, automated systems affect us all.

But researchers and other experts are asking, How well do we understand this alien intelligence? If even AI developers don’t fully understand how their own neural networks make decisions, what chance does the public have to understand AI outcomes? For example, AI systems decide whether a person should get a loan; so what should—what can—that person understand about how the decision was made? And if we can’t understand it, how can any of us trust AI?

The emerging area of explainable AI (XAI) addresses these issues by helping to disclose how an AI system arrives at its outcomes. But the nature of the disclosure depends in part on the audience, that is, on who needs to understand the AI. A car, for example, can send warnings to consumers (“Tire Pressure Low”) and also send highly technical diagnostic codes that only trained mechanics can understand. Explanation modality is also important to consider: some people might prefer spoken explanations to visual ones. Physical forms afford natural interaction with some smart systems, like vehicles and vacuums, but whether tangible interaction can support AI explanation has not yet been explored.

In the summer of 2020, a group of multidisciplinary researchers collaborated on a studio proposal for the 2021 ACM International Conference on Tangible, Embedded, and Embodied Interaction (TEI). The basic idea was to link conversations about tangible and embodied interaction and product semantics to XAI. Here, we first describe the background and motivation for the workshop, then report on its outcomes and offer some discussion points.

Self-explanatory or explainable AI?

Explainable AI (XAI) explores mechanisms for demonstrating how and why intelligent systems make predictions or perform actions, to help people understand, trust, and use these systems appropriately [1]. Importantly, people need to know more about systems than just their accuracy or other performance measures; they need to understand more fully how systems make predictions, where they work well, and what their limitations are. This understanding can increase our trust in AI and help assure us that systems are operating without bias. XAI methods have another benefit: they support people in improving systems by making them more aware of how and when those systems err. Yet XAI is not always a perfect solution. Trust is particularly hard to calibrate; AI sometimes cannot be trusted; and AI explanations may result in either over- or under-reliance on systems, which might open the door to manipulation and managerial control.

The notion of explanations or self-explanatory forms also has a long tradition in product and industrial design. Product semantics is the study of how people make sense of and understand products through their forms, and hence of how products can be self-explanatory [2]. From that perspective, a product explains its functionality and meaning through its physical form and context of use alone. For example, the large dial on a car stereo communicates not only that the volume can be controlled, but also how to control it. In this view, products do not need to explain themselves explicitly, though their forms need to be understandable in principle.

A product-semantic perspective on XAI builds upon the general concerns of XAI but focuses on the user experience and understandability of a given AI system, based on users’ interpretations of its material and formal properties [3]. The dominant interaction modalities, however, do not fully support that kind of experience; hence, we decided to explore tangible embodied interaction (TEI) as a promising interaction modality for that purpose.

From explainable AI to graspable AI: A studio at ACM TEI 2021

At ACM TEI 2021, we organized a studio (similar to a workshop) to map the opportunities that TEI, in its broadest sense, can offer to XAI systems. By TEI in its broadest sense we include Hiroshi Ishii and Brygg Ullmer’s tangible user interfaces, Paul Dourish’s embodied interaction, Kristina Höök’s somaesthetics, and Mikael Wiberg’s materiality of interaction.

We used the phrase graspable AI, which deliberately plays with two senses of the word grasp, one referring to taking something into one’s hand and the other to when the mind “grasps” an idea. The term graspable inherently conveys the meaning of being understandable intellectually, meaningfully, and physically.

In doing so, we drew on two earlier HCI concepts. In 1983, Ben Shneiderman coined the term direct manipulation as a way to “offer the satisfying experience of operating on visible objects. The computer becomes transparent, and users can concentrate on their tasks” [4]. In 1997, Ishii and Ullmer proposed tangible bits, a vision of human-computer interaction that allows users to grasp and manipulate bits by coupling them with everyday physical objects and architectural surfaces. Their work was preceded by George Fitzmaurice’s 1996 Ph.D. thesis, Graspable User Interfaces. The goal of this line of work was to link digital and physical spaces [5,6,7].

Building on these ideas, we viewed graspable AI as a way to approach XAI from the perspective of tangible and embodied interaction, pointing to a product that is not only explainable but also coherent and accessible in a unified tactile and haptic form [8].

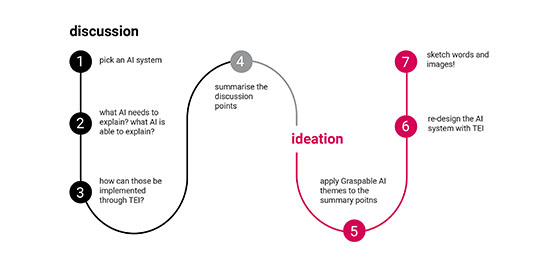

Beyond presenting position papers, studio participants engaged in group activities consisting of three phases (Figure 1). First, each group chose an everyday AI object or system and analyzed its interactions with respect to explainability (what needs to be explained and what the system can explain). The groups then ideated possible tangible interactions with the system and, finally, redesigned the human-AI interaction using TEI.

Figure 1. TEI studio structure and process.

Graspable designs for everyday use

Studio participants split into groups to explore graspable designs for three distinct AI systems for everyday use: movie recommendations, self-tracking, and robotic cleaning devices.

One group explored graspable design for movie recommendations, asking questions about the system’s explainability and how users could interact with it through a tangible interface. One idea that emerged during the discussion and ideation phases was to use a stress ball as a tangible interface to the recommendation system, letting users influence recommendations according to their mood (Figure 2).

Figure 2. Stress ball as a TUI for movie recommendation system.

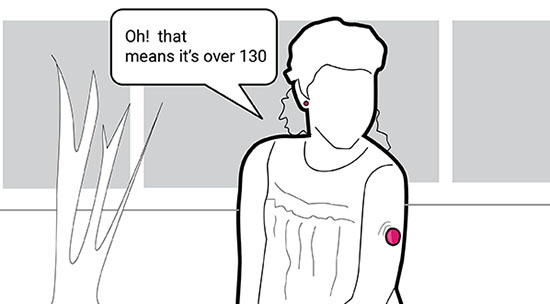

Another group worked on AI self-tracking devices, discussing how such a device might learn from user interactions and make us “feel” whether our blood sugar is high or low. The group also explored provocative ideas, such as an eye that gradually loses its sight and a system that learns and informs the user through haptic feedback (Figure 3).

Figure 3. Tangible AI self-tracking device.

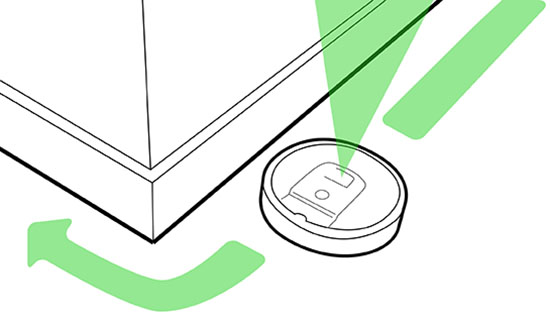

The third group focused on robotic cleaners, discussing how tangible interactions could inform users about robots’ movements and help them anticipate those movements. Making the robot’s intended movement visible was another idea, which led the group to ideate two concepts: a tangible map and a map rendered through spatial image projections (Figure 4).

Figure 4. Robotic vacuum cleaner spatial interactions.

Discussion

Aesthetic accounts often unfold as a back-and-forth between material particulars and interpreted wholes [3]. Further, such accounts consider the difference between what are called explanations in science and interpretations in the humanities and design. Whereas explanation seeks to answer the why of a phenomenon and to reduce its complexity to manageable, understandable units, interpretation is a way to make sense of an experience and of the how of an artifact’s formal and material properties within its sociocultural context [3].

AI systems vary in their complexity; some are easier to explain (e.g., movie recommendation systems), while others are harder to understand because of their complex inner reasoning (e.g., natural language understanding by deep learning). Accordingly, graspable AI can be concerned not only with explanations, but also with how AIs are experienced and interpreted through their material particularities within their sociocultural context. However, we do not mean to suggest that graspable AI is a perfect solution to all fundamental humanities concerns! In this workshop, we explored TEI as an explanation modality. We found that while it has some potential for improving the understandability of a system, it comes with its own challenges. For example, some features and functionalities of AI systems do not need to be tangible, or are already understandable as they are. It was also challenging to design tangible AI systems without falling back into the usual categories of smart objects.

Hence, we conclude this post by discussing three related themes and challenges inherent to AI systems that may fruitfully be approached through the notion of the graspable: growth, unpredictability, and intentionality.

Graspable growth

AI systems are intended to learn over time, as a continuous and internal process based on their data inputs. Hence, an algorithm metaphorically grows over time. As an AI system grows, its functionalities and predictions are expected to improve as well, though devolution is as possible as evolution. But humans are not always aware of this process of learning and growing. Probably the most intuitive form of growth for humans is biological growth: we naturally and intuitively understand what it means when a plant grows new leaves.

We suggest that metaphors of biological growth (and decay) can inspire the design of XAI product semantics to make the AI learning process self-explanatory.

Graspable unpredictability

AI systems are sometimes unpredictable to humans. The decisions they make based on their inner workings and logic may not appear entirely reasonable, fair, or understandable to humans. Further, such systems are ever-changing based on input data, especially if they learn over time. How might an AI decrease its apparent unpredictability by revealing its decisions and purposes through tangible and embodied interactions?

For instance, a robotic cleaner maps the area it is cleaning, and its movement decisions are made based on spatial interaction with the environment and continuous learning of the space. Can its mappings and anticipated movements be rendered in a way that humans can grasp? While the stakes could be low for automated consumer vacuum cleaners, implications for powerful robots used in manufacturing and surgery are more serious.

Graspable intentionality

AI systems make decisions based on their own internal reasoning and logic. These internal mechanisms make some AI systems appear to be independent in their decision-making and therefore to have their own minds, intentions, and purposes. Whether or not this is true from a technical perspective, end users often experience it as such, for instance when an algorithmic hiring tool “discriminates.”

At stake is not only the technical question of how to design a more socially responsible AI, but also how AI developers might expose systems’ workings and intentions to humans by means of tangible and embodied interaction.

Conclusions

AI systems are becoming increasingly complex and intelligent. Their complexity and unpredictability raise important questions concerning, on the one hand, the degree to which end users can actually control them and, on the other, whether and how we can design them in a more transparent and responsible way. In this post, we have outlined some possible challenges and opportunities of using tangible and embodied interaction to design AI systems that are understandable to human users.

Acknowledgment

We would like to thank workshop participants and our colleagues David Cuartielles and Laurens Boer for their valuable input and participation.

Endnotes

1. Preece, A. Asking ‘why’ in AI: Explainability of intelligent systems – perspectives and challenges. Intelligent Systems in Accounting, Finance and Management 25, 2 (2018), 63–72; https://doi.org/10.1002/isaf.1422

2. Krippendorff, K. and Butter, R. Product semantics: Exploring the symbolic qualities of form. Innovation 3, 2 (1984), 4–9.

3. Bardzell, J. Interaction criticism: An introduction to the practice. Interacting with Computers 23, 6 (Nov. 2011), 604–621; https://doi.org/10.1016/j.intcom.2011.07.001

4. Shneiderman, B. Direct manipulation: A step beyond programming languages. Computer 16, 8 (Aug. 1983), 57–69; https://doi.org/10.1109/MC.1983.1654471

5. Fitzmaurice, G., Ishii, H., and Buxton, W. Bricks: Laying the foundations for graspable user interfaces. Proc. of the SIGCHI Conference on Human Factors in Computing Systems. ACM Press/Addison-Wesley Publishing Co., USA, 1995, 442–449; https://doi.org/10.1145/223904.223964

6. Fitzmaurice, G. Graspable User Interfaces. Ph.D. thesis. University of Toronto, 1996; https://www.dgp.toronto.edu/~gf/papers/PhD%20-%20Graspable%20UIs/Thesis.gf.html

7. Ishii, H. and Ullmer, B. Tangible bits: Towards seamless interfaces between people, bits and atoms. Proc. of the ACM SIGCHI Conference on Human Factors in Computing Systems. ACM, New York, 1997, 234–241.

8. Ghajargar, M., Bardzell, J., Renner, A.S., Krogh, P.G., Höök, K., Cuartielles, D., Boer, L., and Wiberg, M. From explainable AI to graspable AI. Proc. of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction. ACM, New York, 2021, 1–4.

