Authors: Giulio Jacucci

Posted: Mon, March 20, 2017 - 3:47:26

I first encountered VR in the late 90s, as a researcher studying how it provided engineers and designers with an environment for prototyping. After that I became more interested in how to augment reality and our surrounding environment. Even so, although VR had by then been around for many decades, I felt that many of its aspects, in particular from an interaction point of view, still deserved investigation. VR has gained renewed interest thanks to the recent proliferation of consumer products, content, and applications. This is accompanied by unprecedented consumer interest and knowledge, and by the maturity of VR, which is increasingly seen as market ready rather than hype. However, important challenges remain, such as dizziness, the limits of current wearable displays, and interaction techniques. Despite these limitations, application fields are flourishing in training, therapy, and well-being, beyond the more traditional VR fields of games and military applications.

One of the most ambitious research goals for interactive systems is to recognize and influence emotions. Affect plays an important role in everything we do and is an essential aspect of social interaction. Studying affective social interaction in VR can benefit the fields mentioned above by supporting mediated communication. For example, for mental or psychological disorders, VR can be used in interventions and training to monitor patient engagement and emotional responses to stimuli, and to provide feedback and correction on particular behaviors. Moreover, VR is increasingly accepted as a research platform in psychology, social science, and neuroscience, because it allows contextual and dynamic factors to be introduced in a controlled way. In these disciplines, affect recognition and synthesis are important aspects of many of the phenomena investigated.

Multimodal synthetic affect in VR

In social interaction, emotions can be expressed in a variety of ways, including gestures, posture, facial expressions, speech and its acoustic features, and touch. Our sense of touch plays a large role in establishing how we feel and act toward another person, for example in conveying hostility, nurturance, dependence, or affiliation.

Having worked separately on physiological and affective computing and on haptics, I saw a unique opportunity to combine these techniques to develop synthetic affect in VR across different modalities. Combining modalities matters because the emotional interpretation of touch can vary depending on cues from other modalities that provide a social context. Facial expressions have been found to modulate touch perception and the post-touch orienting response. Such multimodal affective stimuli are also perceived differently depending on individual differences such as gender and behavioral inhibition; for example, in males, behavioral inhibition sensitivity was associated with stronger affective touch perception.

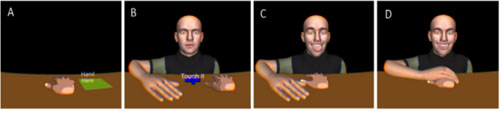

Taking facial expressions and touch as modalities for affective interaction, we can uncover different issues in producing them. Emotional expressions on avatars can currently be produced with off-the-shelf software that analyzes the facial movements of an actor modeling basic expressions, head orientation, and eye gaze; these descriptions are then used to animate virtual characters. Producing expressions that reliably convey the intended emotions typically involves several steps. First, a professional actor's live performance is captured with facial-tracking software that also animates a virtual character. The expressions can then be manually adjusted so that they all last exactly the same time and end with a neutral expression, and a separate animation is created for each distinct emotion type. Finally, the expressions are validated by measuring how accurately participants who watch the animations classify them. This process works well for tailoring facial expressions to replicable experiments. However, it is resource intensive and does not scale to other uses where facial expressions must be generated in greater variety (to express nuances) or must generalize, since every expression is unique and produced individually. As for touch, mediated touch has been shown to affect emotion and behavior, but research into the deployability, resolution, and fidelity of haptics is ongoing. In our recent studies, we compared several techniques for simulating a virtual character's hand touching the hand of a participant.
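The validation step described above, where participants watch each animation and classify it, boils down to computing recognition accuracy per intended emotion. The following is a minimal sketch under stated assumptions: the trial format, the emotion labels, and the 0.7 acceptance threshold are all hypothetical and not taken from our studies. It also reports the most frequent confusion, which hints at how an animation might be adjusted.

```python
from collections import Counter, defaultdict

def recognition_accuracy(trials):
    """Per-emotion recognition accuracy and most frequent confusion.

    `trials` is a list of (intended_emotion, chosen_label) pairs, one per
    participant judgment of an animation (hypothetical format).
    """
    totals = Counter()
    correct = Counter()
    confusions = defaultdict(Counter)  # intended emotion -> wrongly chosen labels
    for intended, chosen in trials:
        totals[intended] += 1
        if chosen == intended:
            correct[intended] += 1
        else:
            confusions[intended][chosen] += 1
    report = {}
    for emotion, n in totals.items():
        top = confusions[emotion].most_common(1)
        report[emotion] = {
            "accuracy": correct[emotion] / n,
            "top_confusion": top[0][0] if top else None,
        }
    return report

def validated(report, threshold=0.7):
    """Emotions whose animation reached the (hypothetical) accuracy threshold."""
    return sorted(e for e, r in report.items() if r["accuracy"] >= threshold)

# Hypothetical judgments from a small validation session.
trials = [
    ("anger", "anger"), ("anger", "anger"), ("anger", "disgust"),
    ("happiness", "happiness"), ("happiness", "happiness"),
    ("fear", "surprise"), ("fear", "fear"),
]
report = recognition_accuracy(trials)
print(validated(report))  # ['happiness']
```

Here "anger" is recognized two times out of three and most often confused with "disgust", so under this threshold only the "happiness" animation would pass without further adjustment.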

Emotion tracking is more challenging when the user wears a VR headset, because the headset covers much of the face and current computer-vision software cannot easily track the user's facial expressions. Physiological sensors can instead be used to recognize changes in psychophysiological states or to assess emotional responses to particular stimuli, and they are being integrated into more and more off-the-shelf consumer products, as in the case of EDA (electrodermal activity) and EEG (electroencephalography). EDA provides a way to track arousal, among other states, and is easy to use unobtrusively, but it is suited to changes on the order of minutes rather than to time-sensitive events on the scale of seconds or milliseconds. EEG, which is increasingly offered in commercial devices, is better suited to temporally precise measurements. For example, how emotional expressions modulate the processing of touch can be studied through the event-related potentials (ERPs) that touch elicits in the EEG. Studies show that EEG can be used together with commonly available HMDs.
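To make the ERP idea concrete: an ERP is estimated by cutting EEG epochs time-locked to each event (here, each touch), subtracting a pre-stimulus baseline, and averaging the epochs so that activity unrelated to the touch tends to cancel out. The sketch below assumes a single EEG channel given as a list of samples and touch onsets given as sample indices; these inputs and the function names are hypothetical. Real pipelines, for example in toolboxes such as MNE-Python, also filter the signal and reject artifact-laden epochs, which this sketch leaves out.

```python
def extract_epochs(signal, onsets, pre, post):
    """Cut [onset - pre, onset + post) windows; skip epochs that run off the recording."""
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start >= 0 and stop <= len(signal):
            epochs.append(signal[start:stop])
    return epochs

def baseline_correct(epoch, pre):
    """Subtract the mean of the `pre` pre-stimulus samples (pre must be > 0)."""
    baseline = sum(epoch[:pre]) / pre
    return [x - baseline for x in epoch]

def erp(signal, onsets, pre, post):
    """Average baseline-corrected epochs sample by sample to estimate the ERP."""
    epochs = [baseline_correct(e, pre) for e in extract_epochs(signal, onsets, pre, post)]
    if not epochs:
        raise ValueError("no complete epochs around the given onsets")
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]

# Toy single-channel recording: flat signal with a response one sample after each touch.
signal = [1.0] * 20
signal[6], signal[13] = 3.0, 5.0
touch_onsets = [5, 12, 19]  # the last onset is too close to the end and is skipped
print(erp(signal, touch_onsets, pre=2, post=3))  # [0.0, 0.0, 0.0, 3.0, 0.0]
```

Comparing such averages between conditions, for instance touches accompanied by different facial expressions, is what allows the modulation of touch processing to be measured.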

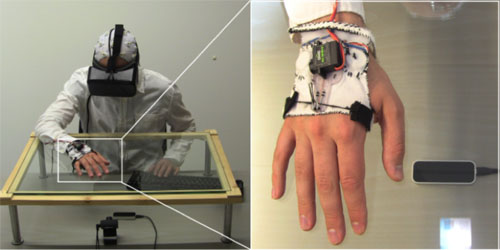

Eye tracking, which has recently become commercially available inside HMDs, can be used both to identify whether users attend to a particular stimulus, so that their emotional response to it can be tracked, and to track psychophysiological phenomena such as cognitive load and arousal. As an example, the setup in Figure 1 combines VR, haptics, and physiological sensors. It can simulate a social interaction at a table, where mediated multimodal affect can be studied while an avatar touches the user’s hand and simultaneously displays a facial expression. The user recognizes the virtual hand in Figure 1A as her own because it is synchronized with her real hand in real time.

Figure 1. Bringing it all together: hand tracking of the user through a glass. Wearable haptics, an EEG cap, and an HMD for VR allow the simulation of a situation in which a person sitting in front of the user touches her hand while showing different facial expressions.
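One common way to use eye tracking for the attention check mentioned above is dwell time: the stimulus counts as attended if gaze stays within an area of interest (AOI) around it long enough. This is a minimal sketch assuming gaze samples as (timestamp in seconds, x, y) in normalized view coordinates and a rectangular AOI around the avatar's face; the sample format, the AOI, and the 0.2 s threshold are hypothetical choices, not those of our setup or of any particular eye tracker's API.

```python
from dataclasses import dataclass

@dataclass
class AOI:
    """Rectangular area of interest in normalized view coordinates (hypothetical)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

def dwell_time(samples, aoi):
    """Total time gaze stayed in the AOI.

    `samples` are (timestamp_s, x, y) tuples sorted by time; each interval is
    credited to the AOI if the sample that starts it lies inside the AOI.
    """
    total = 0.0
    for (t0, x, y), (t1, _, _) in zip(samples, samples[1:]):
        if aoi.contains(x, y):
            total += t1 - t0
    return total

def attended(samples, aoi, min_dwell_s=0.2):
    """Treat the stimulus as attended if dwell time reaches a (hypothetical) threshold."""
    return dwell_time(samples, aoi) >= min_dwell_s

avatar_face = AOI(0.4, 0.2, 0.6, 0.5)
gaze = [(0.0, 0.5, 0.3), (0.1, 0.5, 0.35), (0.2, 0.9, 0.9), (0.3, 0.45, 0.4), (0.4, 0.45, 0.4)]
print(attended(gaze, avatar_face))  # True (about 0.3 s in the face region)
```

Only responses recorded while the stimulus was actually attended would then be attributed to it, for example when relating an EEG or EDA response to a particular facial expression.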

This setup can be used for a number of training, entertainment, or therapy purposes. For example, a recent product applies VR to treating patients with anxiety, and recent studies have evaluated the impact of VR training on helping patients with autism spectrum disorder deal with anxiety. In our own recent study, we used the same setup for an air hockey game: the haptics simulated the hitting of the puck, and the avatar's emotional expressions allowed us to study their effects on players' performance and experience of the game.

Future steps: From research challenges to applications

VR devices, applications, and content are emerging fast. An important feature in the future will be the affective capability of the environment, including the recognition and synthesis of emotions.

A variety of research challenges exist for affective interaction:

- Techniques for recognizing users' emotions from easily deployable sensors, including the fusion of signals from several sensors. Physiological computing is advancing fast in research and in commercial products; the recent success of vision-based solutions that track facial expressions might soon be replicated by physiological sensors.

- Synthesis of affect using multiple modalities, as exemplified here: for example, combining touch and facial expressions, but also speech and its acoustic features and other nonverbal cues. A key question is how to ensure that these multimodal expressions are generally valid while still being generated in unique variations.
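For the signal-fusion challenge listed above, one widely used option is decision-level (late) fusion: each sensor pipeline produces its own class probabilities, and these are combined by a weighted average. The sketch below illustrates that technique only; the modalities, the arousal labels, the probabilities, and the weights are all hypothetical, and feature-level fusion or learned fusion models are equally valid alternatives.

```python
def late_fusion(predictions, weights):
    """Weighted average of per-modality class probabilities.

    `predictions` maps modality -> {label: probability}; `weights` maps
    modality -> non-negative weight. Modalities with zero weight are ignored.
    """
    fused = {}
    total_weight = 0.0
    for modality, probs in predictions.items():
        w = weights.get(modality, 0.0)
        if w <= 0:
            continue
        total_weight += w
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total_weight == 0:
        raise ValueError("no modality has a positive weight")
    return {label: score / total_weight for label, score in fused.items()}

def fused_label(predictions, weights):
    """Label with the highest fused probability."""
    fused = late_fusion(predictions, weights)
    return max(fused, key=fused.get)

# Hypothetical outputs of two separate arousal classifiers for one time window.
predictions = {
    "eda": {"high_arousal": 0.8, "low_arousal": 0.2},
    "eeg": {"high_arousal": 0.3, "low_arousal": 0.7},
}
print(fused_label(predictions, {"eda": 1.0, "eeg": 1.0}))  # high_arousal
print(fused_label(predictions, {"eda": 1.0, "eeg": 3.0}))  # low_arousal
```

The weights express how much each sensor is trusted; in practice they might be tuned on validation data or reflect the time scale a sensor can resolve, such as slow EDA versus fast EEG.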

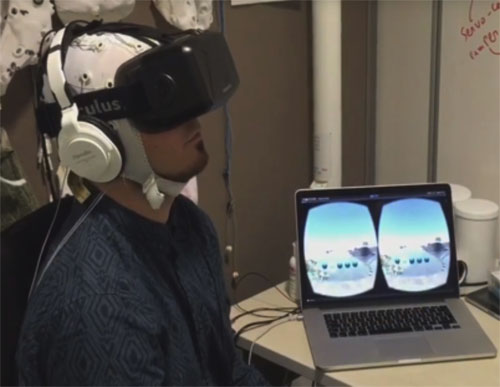

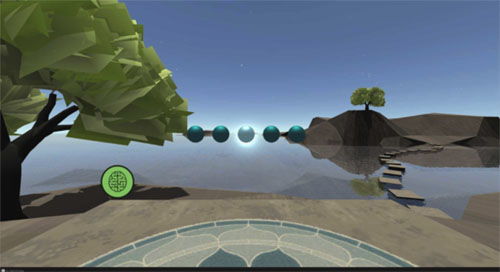

Figure 2. RelaWorld using VR and physiological sensors (EEG) for a neuroadaptive meditation environment.

While these challenges are hard, the potential application fields are numerous and replete with emerging evidence of their relevance:

- Training, for example for emergency or disaster situations, but in principle in any setting where a learner needs to practice a task in an environment where she must attend to numerous features and to social interaction.

- In certain training situations, affective capabilities are essential to carrying out the task, such as in therapy, which can be more physical, as with limb injuries, more mental, as with autism spectrum disorder and social phobias, or both, as in stroke rehabilitation. In several of these situations, for example mental disorders such as autism, anxiety, and social phobias, the patient practices social interaction while their recognition of and responses to emotional situations are monitored.

- Well-being applications such as physical exercise and meditation (Figure 2). Here, affective interaction can motivate physical exercise or monitor psychophysiological states such as engagement or relaxation.
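A neuroadaptive environment such as the meditation example above closes a loop: a psychophysiological state estimated from EEG continuously drives some property of the virtual world. The sketch below shows only that feedback step, under explicit assumptions: a relaxation index normalized to 0..1 is computed elsewhere for each EEG window, it is smoothed with an exponential moving average so the environment does not flicker, and it is mapped linearly to a hypothetical environment parameter. The class name and constants are illustrative and do not describe RelaWorld's actual implementation.

```python
class NeuroadaptiveFeedback:
    """Map a smoothed relaxation index (assumed 0..1) to an environment parameter."""

    def __init__(self, alpha=0.3, min_value=0.0, max_value=1.0):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha          # weight of the newest reading in the moving average
        self.min_value = min_value  # parameter value at relaxation index 0
        self.max_value = max_value  # parameter value at relaxation index 1
        self.smoothed = None        # no reading yet

    def update(self, relaxation_index):
        """Smooth the new reading and return the parameter value to apply."""
        x = min(max(relaxation_index, 0.0), 1.0)  # clamp to the assumed 0..1 range
        if self.smoothed is None:
            self.smoothed = x
        else:
            self.smoothed = self.alpha * x + (1 - self.alpha) * self.smoothed
        return self.min_value + self.smoothed * (self.max_value - self.min_value)

# Hypothetical readings from three consecutive EEG windows.
fb = NeuroadaptiveFeedback(alpha=0.5, min_value=0.0, max_value=2.0)
for reading in [0.2, 0.6, 1.5]:
    print(round(fb.update(reading), 3))  # 0.4, then 0.8, then 1.4
```

A smaller `alpha` makes the environment react more slowly but more stably, which is a design trade-off between responsiveness and distracting the user during meditation.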
