Authors: Mario Romero

Posted: Fri, February 16, 2018 - 12:02:48

This post started as a response to the column What Are You Reading? in Interactions. While I have a number of books I want to discuss, the topic made me reflect on how I read. My reading habits have evolved from working with blind people. I continue to read novels mostly with my eyes, but for research papers I use my ears.

For many years now I have mostly read thesis drafts. In my role as supervisor and thesis examiner, I read approximately 30 master’s theses and five Ph.D. theses every year. I know what you may be thinking, but, yes, I actually read and review them. Furthermore, as a paper reviewer, I read approximately six papers every year. At an average of 10,000 words per master’s thesis, 30,000 per Ph.D. thesis, and 7,000 per paper, that is about half a million words, the equivalent of a paperback novel of roughly 1,700 pages.

Now, mind you, I am a slow reader, even when the text is clear. Most of the theses I read are drafts that typically require several rounds of careful reading and editing. If the text is clear, I can read about 250 words per minute. At that rate, it takes me about 33 hours to do one pass through my annual review material.
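The arithmetic behind these estimates can be checked with a minimal Python sketch. The thesis and paper counts, the average lengths, and the reading rates (250, 400, and 500 wpm) are the figures quoted in this post; the 300 words per paperback page is an assumption made only for this illustration.

```python
# Back-of-the-envelope check of the annual reading load described in the post.
# Counts, average lengths, and reading rates come from the post itself.
COUNTS = {"master": 30, "phd": 5, "paper": 6}               # documents per year
WORDS = {"master": 10_000, "phd": 30_000, "paper": 7_000}   # average words each
WORDS_PER_PAGE = 300  # ASSUMPTION: a typical paperback page, not from the post


def annual_words():
    """Total words reviewed in one year."""
    return sum(COUNTS[kind] * WORDS[kind] for kind in COUNTS)


def hours_per_pass(total_words, wpm):
    """Hours needed to read total_words once at wpm words per minute."""
    return total_words / wpm / 60


total = annual_words()
print(f"{total} words, about {total // WORDS_PER_PAGE} paperback pages")
for wpm in (250, 400, 500):
    print(f"{wpm} wpm: {hours_per_pass(total, wpm):.1f} hours per pass")
```

Under these assumptions, one pass at 250 wpm takes roughly 33 hours, and doubling the rate to 500 wpm halves that to roughly 16 hours.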

My goal here is to share my experience in switching from reading with my eyes to reading with my ears, and from editing with my fingers to editing with my voice. This switch has meant not just a significant boost to my throughput, but also an improvement in my focus and comprehension, and has allowed me to work in many more contexts. Paradoxically, perhaps the greatest benefit for me has been the ability to stay focused on the reading material while performing other physical activities, such as walking home from work. This blog outlines the methods that I have developed over the years and reflects on their pros and cons.

I begin with a short story. A few years ago, I ran a stop sign on the Georgia Tech campus, and a police officer stopped me and issued me a court summons. In court, I had the option to pay a $100 fine or perform community service. As a graduate student, I chose service. I signed up to volunteer at the Center for the Visually Impaired in Atlanta. During my week of service, I observed two blind teenagers in class not paying attention to their teacher. Rather, they were giggling at a mysterious device. It had a shoulder strap and rested on the girl’s hip. She pressed combinations of six buttons and ran her index finger across a strip at the bottom. She giggled and shared the experience with her friend, who also giggled as he ran his fingers across the device. I was fascinated. What was producing this strong emotional response?

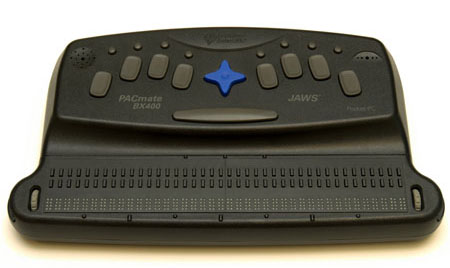

A typical portable braille writer and reader, the Freedom Scientific PACmate, which we used in our user studies. Note that the buttons on top allow chorded input, and the pins at the bottom move up and down to produce dynamic, “refreshable” braille output. (Image CC BY Mario Romero 2011)

I discovered they were using a portable braille notetaker, which includes a six-key chorded keyboard and a refreshable braille display, the strip they were reading with their fingertips. After that encounter, my colleagues and I started researching how blind people enter and read text on mobile devices. We discovered that the device the teens were using was called BrailleNote. It cost a sizable US$6,000.
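To make "six-key chorded keyboard" concrete, here is a minimal Python sketch of how a chord of pressed keys could map to a letter. It assumes uncontracted braille for the 26 basic Latin letters and the usual dot numbering (dots 1 to 3 down the left column, 4 to 6 down the right). It is only an illustration of the idea, not how the BrailleNote, the PACmate, or BrailleTouch is implemented, and the function names are hypothetical.

```python
# Illustrative chord decoder: each of the six keys corresponds to one braille dot.
# ASSUMPTION: uncontracted braille, basic letters only; not any device's real code.
A_TO_J = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}


def build_table():
    """Derive all 26 letters from the first ten, following the braille pattern."""
    table = dict(A_TO_J)
    for src, dst in zip("abcdefghij", "klmnopqrst"):
        table[dst] = A_TO_J[src] | {3}       # k-t: add dot 3 to a-j
    for src, dst in zip("abcde", "uvxyz"):
        table[dst] = A_TO_J[src] | {3, 6}    # u, v, x, y, z: add dots 3 and 6
    table["w"] = {2, 4, 5, 6}                # w breaks the pattern: j plus dot 6
    return table


CHORD_TO_LETTER = {frozenset(dots): letter for letter, dots in build_table().items()}


def decode_chord(pressed):
    """Return the letter for a set of simultaneously pressed dot keys, or '?'."""
    return CHORD_TO_LETTER.get(frozenset(pressed), "?")


def to_cell(dots):
    """Unicode braille character for a chord: dot n sets bit n-1 above U+2800."""
    return chr(0x2800 + sum(1 << (dot - 1) for dot in dots))


# Two chords typed in sequence: dots 1-2-5, then dots 2-4.
print("".join(decode_chord(chord) for chord in [{1, 2, 5}, {2, 4}]))  # hi
```

A refreshable display does the reverse: for each letter it raises the pins listed in the table, which `to_cell` mimics with the Unicode braille characters.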

We also determined that smartphones were, ironically, a much cheaper alternative. Although smartphones do not have braille displays, they can use voice synthesis to read out screen content. The user places a finger on the screen and the reader voices the target, a very efficient method to read out information. Unfortunately, inputting text is not as simple. While many users speak to their phones using speech-recognition technology, they cannot do so under many conditions, particularly in public environments where privacy concerns and ambient noise render the task impractical. In our research, we developed a two-hand method for entering text using braille code called BrailleTouch [1]. Apple’s iOS has since incorporated a similar method of text input.

A participant in our study using BrailleTouch. (Image CC BY Mario Romero 2011)

What we did not expect to discover was the extraordinary ability of experienced screen-reader users to listen to text at tremendous speeds. We observed participants running their screen readers at speeds at which we could not make out a single word. They assured us that they understood everything and that it was a skill acquired through simple practice, with nothing superhuman about it.

That realization sent me down the path of using my hearing for reading. I started slowly, first learning to enable the accessibility features on my iPhone. Then I learned to control the speech rate. I started at about 50%, or 250 wpm. I could understand speech at that rate, which happened to be my eye-reading rate. I downloaded PDF versions of my students’ theses onto my phone and tablet and read them using VoiceOver [2]. Unfortunately, it did not work as well as I expected. The reader did not flow on its own; it stopped at links, paragraphs, and page breaks, and I had to push it along by swiping.

After some research, I discovered an app called vBookz PDF Voice Reader. I uploaded my students’ theses in PDF format and started to speed things up. After a few months I was reading at 400 wpm. The app also shows a visual marker of where you are in the text, so you can follow along with your eyes. After about a year, I was reading at full speed, 500 wpm, with full comprehension. More importantly, I no longer had to follow with my eyes. I could, for example, walk while reading, see where I was going, and remain fully focused on the text. Today there are myriad text-to-speech reading apps, and I encourage you to explore them to find the one that suits you best.

This newfound ability has meant a dramatic change in my reviewing practices. Yet it is not the whole picture. There are more practices I have explored and there are important limitations as well. I started dictating feedback on my phone. I stopped typing and started using Siri to provide comments and corrections. The upside is that I can continue to stay focused on both reading and writing while remaining physically active. The downside is that I need to verbally state the context of the feedback, as in “paragraph 3, sentence 2.” Nevertheless, I find it liberating and efficient.

What are these other important limitations? First, the text-to-speech reader voices everything: page numbers, URLs, footnotes, image names, citations. It even says “bullet point” for the dot that starts each list item. Most of these landmarks are distracting; when reading with my eyes, I know to skip over them. Second, tables and graphs are a nightmare to read with my ears. I have to stop the reader and use my eyes whenever I reach content with a non-linear structure. Third, figures are only legible as far as their text descriptions allow, and even then it is better to use my eyes. Fourth, and last, I have to use headphones. Otherwise, the echo from the device’s speakers coupled with ambient noise renders high-speed reading impossible for me.

Despite these limitations, which are current research topics in eyes-free reading, I consider myself fortunate to have recruited participants who had the generosity and sense of pride to share their expertise. I am a better research supervisor for it. Yet, for all the boost in speed and comprehension I get from ear reading, I still enjoy reading novels with my eyes and listening to audiobooks read by human actors at normal speed. My mind’s voice and the voices of other humans remain my preferred way to read for pleasure. Perhaps in a few years voice synthesizers will become so human that they pass this version of the Turing test.

Endnotes

1. Southern, Caleb, James Clawson, Brian Frey, Gregory Abowd, and Mario Romero. "An evaluation of BrailleTouch: mobile touchscreen text entry for the visually impaired." In Proceedings of the 14th international conference on Human-computer interaction with mobile devices and services, pp. 317-326. ACM, 2012.

2. https://help.apple.com/voiceover/info/guide/10.12/

@ragdoll hit (2025 05 05)

I totally relate to what you’re saying. Text-to-speech can be really helpful, but it definitely comes with its own set of challenges. The part about it reading out every little detail like “bullet point” or URLs—yeah, that gets old fast. It’s like your brain learns to filter out the clutter, which is kind of wild in itself.

@5 minute timer (2025 07 29)

“Reading with ears” through audiobooks or podcasts makes literature accessible and multitask-friendly, blending storytelling with modern convenience. It’s a testament to how technology adapts to our evolving ways of learning and enjoying narratives.

@5 minute timer (2025 07 29)

The story from Georgia Tech made your perspective even more powerful—showing how engaging alternative sensory methods can unlock focus in unexpected situations.

@Shida (2025 09 01)

I really enjoyed reading this reflection on shifting from reading with your eyes to reading with your ears—it’s fascinating how technology and accessibility tools can reshape not only how we consume information but also how we focus. Your story about the blind teenagers with the BrailleNote was especially moving. It made me think of how I sometimes train my own focus with sudoku puzzles: at first distracting, but then everything clicks once I adjust to a new way of processing.
